AI chatbots aren’t reliable. Can OpenAI, Google or others fix it?

[ad_1]

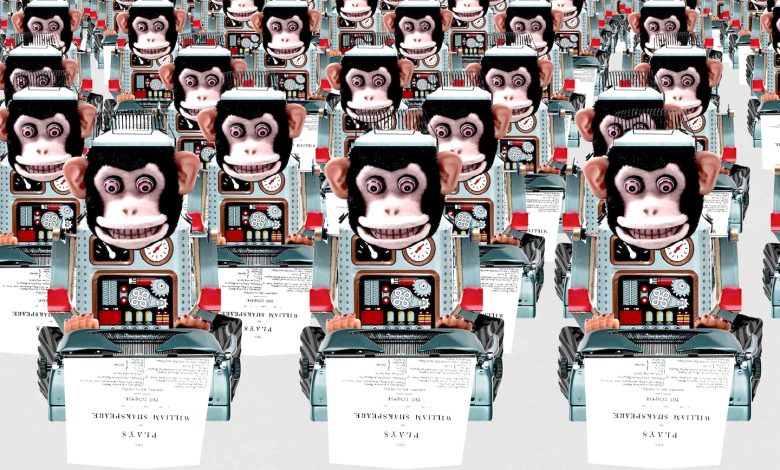

The finding, in a paper released by a team of MIT researchers last week, is the latest potential breakthrough in helping chatbots arrive at the correct answer. The researchers proposed using different chatbots to produce multiple answers to the same question and then letting them debate each other until one answer won out. They found that this “society of minds” method made the bots more factual.
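To make the debate idea concrete, here is a minimal Python sketch. It is not the MIT team’s code: the `debate`, `stubborn` and `persuadable` functions are hypothetical, and the “agents” are toy stand-ins for chatbots that simply return strings. Each agent answers, sees the other agents’ answers, may revise its own, and the most common final answer wins.

```python
from collections import Counter
from typing import Callable, List

# Hypothetical agent interface: (question, other agents' latest answers) -> answer.
# In the research the agents are language models; here they are toy stand-ins.
Agent = Callable[[str, List[str]], str]


def debate(question: str, agents: List[Agent], rounds: int = 3) -> str:
    """Let agents revise their answers after seeing each other's, then take a vote."""
    # Round 0: every agent answers on its own.
    answers = [agent(question, []) for agent in agents]
    for _ in range(rounds):
        # Each agent sees everyone else's previous answer and may change its mind.
        answers = [
            agent(question, answers[:i] + answers[i + 1:])
            for i, agent in enumerate(agents)
        ]
        if len(set(answers)) == 1:  # stop early once everyone agrees
            break
    # The answer held by the most agents at the end "wins out".
    return Counter(answers).most_common(1)[0][0]


def stubborn(answer: str) -> Agent:
    """Toy agent that never changes its answer."""
    return lambda question, peers: answer


def persuadable(initial: str) -> Agent:
    """Toy agent that adopts its peers' majority view once it has seen them."""
    def agent(question: str, peers: List[str]) -> str:
        if not peers:
            return initial
        return Counter(peers).most_common(1)[0][0]
    return agent


if __name__ == "__main__":
    panel = [stubborn("eight"), stubborn("eight"), persuadable("five")]
    print(debate("How many times has Roger Federer won Wimbledon?", panel))
```

In the actual research the agents are language models reading each other’s full responses; the majority vote at the end is simply one assumed way of deciding which answer “won out.”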

“Language models are trained to predict the next word,” said Yilun Du, a researcher at MIT who was previously a research fellow at OpenAI and one of the paper’s authors. “They are not trained to tell people they don’t know what they’re doing.” The result is bots that act like precocious people-pleasers, making up answers instead of admitting they simply don’t know.

The researchers’ creative approach is just the latest attempt to solve one of the most pressing concerns in the exploding field of AI. Despite the incredible leaps in capabilities that “generative” chatbots like OpenAI’s ChatGPT, Microsoft’s Bing and Google’s Bard have demonstrated in the last six months, they still have a major flaw: they make things up all the time.

Figuring out how to prevent or fix what the field calls “hallucinations” has become an obsession among many tech workers, researchers and AI skeptics alike. The issue is discussed in dozens of academic papers posted to the online preprint server arXiv, and Big Tech CEOs like Google’s Sundar Pichai have addressed it repeatedly. As the tech is pushed out to millions of people and integrated into critical fields including medicine and law, understanding hallucinations and finding ways to mitigate them has become even more important.

Most researchers agree the problem is inherent to the “large language models” that power the bots because of the way they are designed. The models predict the most apt thing to say based on the huge amounts of data they have digested from the internet, but they have no way to understand what is factual and what is not.

Still, researchers and companies are throwing themselves at the problem. Some companies are using human trainers to rewrite the bots’ answers and feed them back into the machine with the goal of making them smarter. Google and Microsoft have started using their bots to give answers directly in their search engines, but still double-check the bots against regular search results. And academics around the world have suggested myriad clever ways to decrease the rate of false answers, like MIT’s proposal to get multiple bots to debate each other.

The drive to fix the hallucination problem is urgent for good reason.

Already, when Microsoft launched its Bing chatbot, it quickly started making false accusations against some of its users, like telling a German college student that he was a threat to its safety. The bot adopted an alter ego and started calling itself “Sydney.” It was essentially riffing off the student’s questions, drawing on all the science fiction about out-of-control robots it had digested from the internet.

Microsoft eventually had to limit the number of back-and-forths the bot could engage in with a human to keep it from happening again.

In Australia, a government official threatened to sue OpenAI after ChatGPT said he had been convicted of bribery, when in reality he was a whistleblower in a bribery case. And last week a lawyer admitted to using ChatGPT to generate a legal brief after he was caught because the cases the bot cited so confidently simply did not exist, according to the New York Times.

Even Google and Microsoft, which have pinned their futures on AI and are racing to integrate the tech into their wide range of products, have missed hallucinations their bots made during key announcements and demos.

None of that is stopping the companies from rushing headlong into the space. Billions of dollars in investment is going into developing smarter and faster chatbots, and companies are beginning to pitch them as replacements or aids for human workers. Earlier this month OpenAI CEO Sam Altman testified before Congress, saying AI could “cause significant harm to the world” by spreading disinformation and emotionally manipulating humans. Some companies are already saying they want to replace workers with AI, and the tech also presents serious cybersecurity challenges.

Hallucinations have also been documented in AI-powered transcription services, which have added words to recordings that were never spoken in real life. And Microsoft and Google using the bots to answer search queries directly, instead of sending traffic to blogs and news stories, could erode the business model of online publishers and content creators who work to produce trustworthy information for the internet.

“No one in the field has yet solved the hallucination problems. All models do have this as an issue,” Pichai said in an April interview with CBS. Whether it is even possible to solve it is a “matter of intense debate,” he said.

Depending on how you look at them, hallucinations are both a feature and a bug of large language models. They are part of what allows the bots to be creative and generate never-before-seen stories. At the same time, they reveal the stark limitations of the tech, undercutting the argument that chatbots are intelligent in a way similar to humans by suggesting that they do not have an internalized understanding of the world around them.

“There’s nothing in there that tells the model that whatever it’s saying should be actually correct in the world,” said Ece Kamar, a senior researcher at Microsoft. The model also trains on a fixed amount of data, so anything that happens after training is finished doesn’t factor into its knowledge of the world, Kamar said.

Hallucinations are not new. They have been an inherent problem of large language models since their inception several years ago, but other problems, such as the AIs producing nonsensical or repetitive answers, were seen as bigger issues. Now that those have largely been solved, hallucinations have become a key focus for the AI community.

Potsawee Manakul was playing around with ChatGPT when he asked it for some simple facts about tennis legend Roger Federer. It’s a straightforward request, easy for a human to look up on Google or Wikipedia in seconds, but the bot kept giving contradictory answers.

“Sometimes it says he won Wimbledon five times, sometimes it says he won Wimbledon eight times,” Manakul, an AI researcher at the University of Cambridge and ardent tennis fan, said in an interview. (The correct answer is eight.)

Manakul and a group of other Cambridge researchers released a paper in March proposing a system they called “SelfCheckGPT” that asks the same bot a question multiple times, then asks it to compare the different answers. If the answers are consistent, the facts are likely correct; if they differ, the answers can be flagged as probably containing made-up information.
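A rough sketch of the consistency check follows, assuming the chatbot can be called as a plain Python function (`ask`, hypothetical here). The SelfCheckGPT paper compares free-text answers with more sophisticated measures; this version treats two answers as agreeing only if the strings are identical, and the 0.7 cutoff is an arbitrary illustrative choice.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, Tuple


def self_check(ask: Callable[[str], str], question: str,
               n_samples: int = 10, threshold: float = 0.7) -> Tuple[str, float, bool]:
    """Ask the same question n_samples times and measure how often the answers agree.

    Returns (most common answer, fraction of samples that gave it, flagged?).
    Exact string matching and the 0.7 threshold are simplifying assumptions.
    """
    samples = [ask(question) for _ in range(n_samples)]
    answer, count = Counter(samples).most_common(1)[0]
    consistency = count / n_samples
    # Low agreement suggests the model is guessing, so flag the answer.
    return answer, consistency, consistency < threshold


if __name__ == "__main__":
    # Toy stand-ins for a chatbot: one always agrees with itself, one flip-flops.
    steady = lambda q: "eight times"
    flip = cycle(["five times", "eight times"])
    flaky = lambda q: next(flip)
    question = "How many times has Roger Federer won Wimbledon?"
    print(self_check(steady, question))  # fully consistent, not flagged
    print(self_check(flaky, question))   # half the samples disagree, flagged
```

Sampling the same model repeatedly only works because chatbots generate text with some randomness; a model that returned the identical wrong answer every time would pass this check, which is one reason the researchers treat consistency as a signal rather than proof.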

When humans are asked to write a poem, they know it is not necessarily important to be factually correct. But when asked for biographical details about a real person, they automatically know their answer should be rooted in reality. Because chatbots are simply predicting what word or idea comes next in a string of text, they don’t yet have that contextual understanding of the question.

“It doesn’t have the concept of whether it should be more creative or if it should be less creative,” Manakul said. Using their method, the researchers showed that they could weed out factually incorrect answers and even rank answers based on how factual they were.

A whole new method of AI learning that hasn’t been invented yet will likely be necessary, Manakul said. Only by building systems on top of the language model can the problem really be mitigated.

“Because it blends information from multiple things, it will generate something that looks plausible,” he said. “But whether it’s factual or not, that’s the issue.”

That is essentially what the leading companies are already doing. When Google generates search results using its chatbot technology, it also runs a regular search in parallel, then checks whether the bot’s answer and the traditional search results match. If they don’t, the AI answer won’t even show up. The company has tweaked its bot to be less creative, meaning it’s not very good at writing poems or having interesting conversations, but it is less likely to lie.
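Google hasn’t published how this comparison works, so the following is only an illustration of the general pattern under stated assumptions: `generate` and `search` are hypothetical callables standing in for the chatbot and the ordinary search engine, and “match” is approximated by crude word overlap with an arbitrary 0.8 threshold.

```python
import re
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Optional, Set


def _words(text: str) -> Set[str]:
    """Lowercase alphanumeric tokens; a crude stand-in for real text comparison."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def corroborated_answer(query: str,
                        generate: Callable[[str], str],
                        search: Callable[[str], List[str]],
                        min_overlap: float = 0.8) -> Optional[str]:
    """Return the bot's answer only if some search result backs it up, else None."""
    # Run the chatbot and the ordinary search side by side.
    with ThreadPoolExecutor(max_workers=2) as pool:
        answer_future = pool.submit(generate, query)
        results_future = pool.submit(search, query)
        answer = answer_future.result()
        results = results_future.result()

    answer_words = _words(answer)
    if not answer_words:
        return None
    # Share of the answer's words found in the best-matching search result.
    support = max(
        (len(answer_words & _words(r)) / len(answer_words) for r in results),
        default=0.0,
    )
    # If the two don't match closely enough, show no AI answer at all.
    return answer if support >= min_overlap else None


if __name__ == "__main__":
    search = lambda q: ["Roger Federer won Wimbledon eight times, a men's record."]
    good = lambda q: "Federer has won Wimbledon eight times"
    bad = lambda q: "Federer has won Wimbledon five times"
    print(corroborated_answer("Federer Wimbledon titles", good, search))
    print(corroborated_answer("Federer Wimbledon titles", bad, search))
```

Running the example prints the correct answer and then `None`, because the wrong number of titles pulls the overlap below the threshold. Word overlap is far too blunt for production use; the sketch only shows the shape of the idea: answer and search run in parallel, and the AI answer is suppressed when the two disagree.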

By limiting its search bot to corroborating existing search results, the company has been able to cut down on hallucinations and inaccuracies, said Google spokeswoman Jennifer Rodstrom. A spokesperson for OpenAI pointed to a paper the company had produced showing that its latest model, GPT-4, produced fewer hallucinations than previous versions.

Companies are also spending time and money improving their models by testing them with real people. A technique called reinforcement learning from human feedback, in which human testers manually improve a bot’s answers and then feed them back into the system to improve it, is widely credited with making ChatGPT so much better than the chatbots that came before it. Another popular approach is to connect chatbots to databases of factual or more trustworthy information, such as Wikipedia, Google search or bespoke collections of academic articles or business documents.
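Connecting a chatbot to a database of trusted information is often called retrieval-augmented generation. Here is a minimal sketch, assuming a tiny in-memory list of trusted documents and naive keyword matching in place of a real search index; `retrieve` and `grounded_prompt` are hypothetical names, and the resulting prompt would then be sent to whatever chatbot is in use.

```python
import re
from typing import List, Set


def _tokens(text: str) -> Set[str]:
    """Lowercase alphanumeric tokens used for naive keyword matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: List[str], k: int = 2) -> List[str]:
    """Return up to k documents sharing the most words with the query."""
    q = _tokens(query)
    ranked = sorted(corpus, key=lambda doc: len(q & _tokens(doc)), reverse=True)
    # Drop documents that have no words in common with the query.
    return [doc for doc in ranked[:k] if q & _tokens(doc)]


def grounded_prompt(query: str, corpus: List[str], k: int = 2) -> str:
    """Build a prompt that tells the model to answer only from retrieved sources."""
    sources = retrieve(query, corpus, k)
    context = "\n".join(f"[{i + 1}] {doc}" for i, doc in enumerate(sources))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    trusted = [
        "Roger Federer won Wimbledon eight times.",
        "Wimbledon is played on grass courts.",
        "Python is a programming language.",
    ]
    print(grounded_prompt("How many times did Federer win Wimbledon?", trusted))
```

The design choice is to move the facts out of the model’s memory and into the prompt, so the bot paraphrases trusted text instead of predicting facts from scratch. It narrows the room for hallucination but does not guarantee the model will stick to its sources.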

Some leading AI researchers say hallucinations should be embraced. After all, humans have bad memories as well and have been shown to fill in the gaps in their own recollections without realizing it.

“We’ll improve on it, but we’ll never get rid of it,” Geoffrey Hinton, whose decades of research helped lay the foundation for the current crop of AI chatbots, said of the hallucination problem. He worked at Google until recently, when he quit to speak more publicly about his concerns that the technology could get out of human control. “We’ll always be like that and they’ll always be like that.”
