AI energy demands could soon match the entire electricity consumption of Ireland

Forward-looking: We hear a lot of legitimate concerns about the new wave of generative AI, from the human jobs it could replace to its potential for creating misinformation. But one area that often gets overlooked is the sheer amount of energy these systems use. In the not-so-distant future, the technology could be consuming the same amount of electricity as an entire country.

Alex de Vries, a researcher at the Vrije Universiteit Amsterdam, authored ‘The Growing Energy Footprint of Artificial Intelligence,’ which examines the environmental impact of AI systems.

De Vries notes that the training phase for large language models is often considered the most energy-intensive, and therefore has been the focus of sustainability research in AI.

Following training, models are deployed into a production environment and begin the inference phase. In the case of ChatGPT, this involves generating live responses to user queries. Little research has gone into the inference phase, but De Vries believes there are indications that this period might contribute significantly to an AI model's life-cycle costs.

According to research firm SemiAnalysis, OpenAI required 3,617 Nvidia HGX A100 servers, with a total of 28,936 GPUs, to support ChatGPT, implying an energy demand of 564 MWh per day. For comparison, an estimated 1,287 MWh was used in GPT-3's entire training phase, so inference would consume more than that in under three days.
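The comparison between inference and training can be checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes the 6.5 kW per-server power demand that the article quotes for the Google scenario also applies here, and the variable names are invented for this example.

```python
# Figures quoted in the article (SemiAnalysis estimate for ChatGPT).
SERVERS = 3_617
GPUS_PER_SERVER = 8          # 28,936 GPUs / 3,617 servers
TRAINING_MWH = 1_287         # estimated energy used to train GPT-3

# Assumption: the 6.5 kW per-server demand cited for the Google
# scenario is also the basis of the 564 MWh/day figure.
KW_PER_SERVER = 6.5

total_gpus = SERVERS * GPUS_PER_SERVER
daily_mwh = SERVERS * KW_PER_SERVER * 24 / 1_000   # kWh -> MWh
days_to_match_training = TRAINING_MWH / daily_mwh

print(f"GPUs: {total_gpus:,}")
print(f"Inference energy: {daily_mwh:.0f} MWh per day")
print(f"Days of inference to equal training: {days_to_match_training:.1f}")
```

Under that assumption the daily figure comes out at roughly 564 MWh, matching the article, and a little over two days of serving queries would use as much energy as the whole training run.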

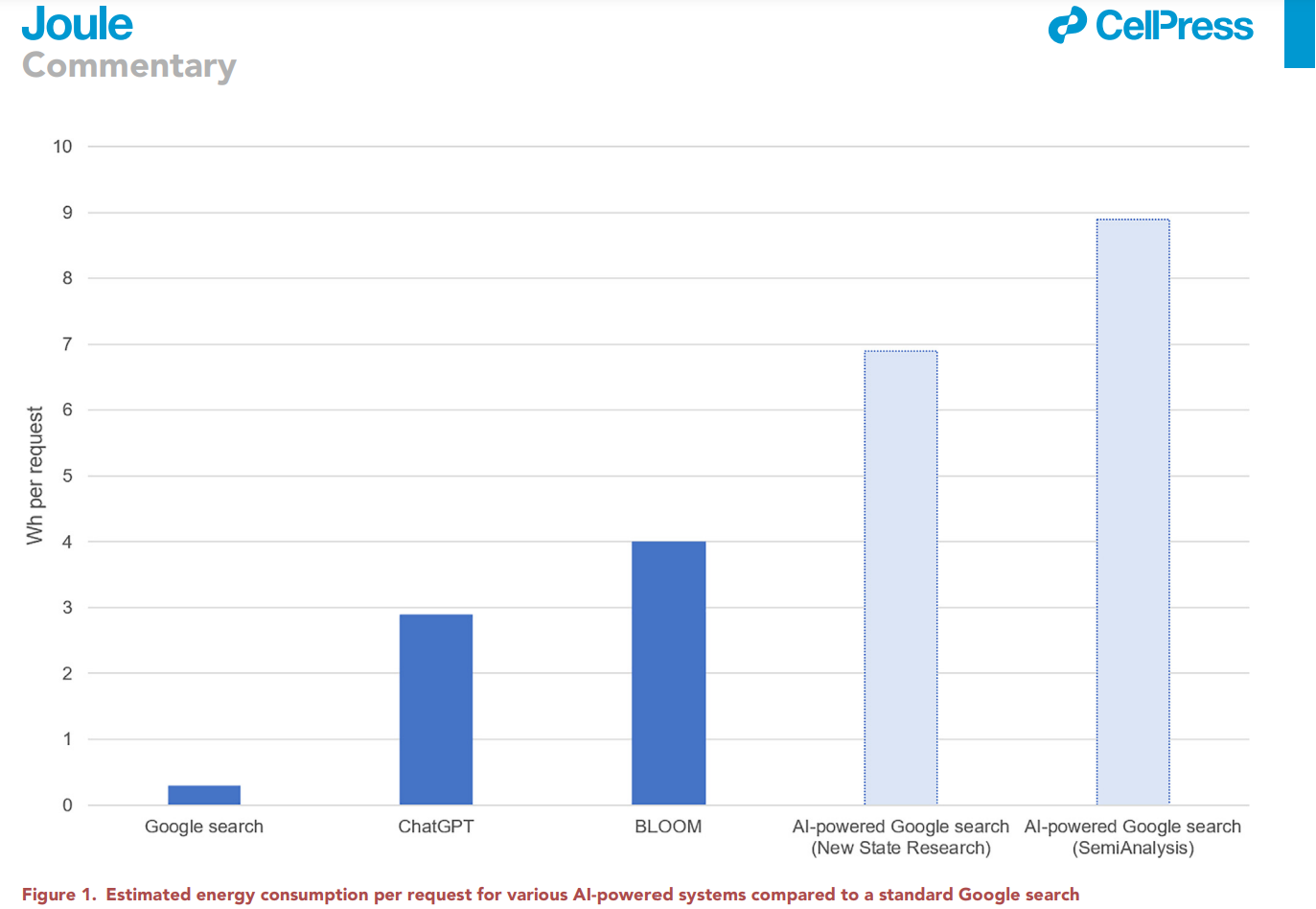

Google, which reported that 60% of AI-related energy consumption from 2019 to 2021 stemmed from inference, is integrating AI features into its search engine. Back in February, Alphabet Chairman John Hennessy said that a single user exchange with an AI-powered search service “likely costs ten times more than a standard keyword search.”

SemiAnalysis estimates that implementing an AI similar to ChatGPT into every single Google search would require 512,821 A100 HGX servers, totaling 4,102,568 GPUs. At a power demand of 6.5 kW per server, this would translate into a daily electricity consumption of 80 GWh and an annual consumption of 29.2 TWh, about the same amount used by Ireland every year.
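The scenario's figures follow from multiplying the server count by the per-server power demand and the hours of operation. A minimal sketch, assuming every server runs at its full 6.5 kW around the clock for 365 days (the function names are hypothetical, chosen for this example):

```python
def daily_energy_gwh(servers: int, kw_per_server: float) -> float:
    """Energy used in one day, in GWh, with all servers at full power."""
    kwh_per_day = servers * kw_per_server * 24
    return kwh_per_day / 1_000_000  # kWh -> GWh


def annual_energy_twh(servers: int, kw_per_server: float, days: int = 365) -> float:
    """Energy used in one year, in TWh, with all servers at full power."""
    return daily_energy_gwh(servers, kw_per_server) * days / 1_000  # GWh -> TWh


# Figures from the SemiAnalysis scenario quoted in the article.
servers = 512_821
gpus = servers * 8  # eight GPUs per HGX A100 server

print(f"GPUs: {gpus:,}")
print(f"Daily:  {daily_energy_gwh(servers, 6.5):.1f} GWh")
print(f"Annual: {annual_energy_twh(servers, 6.5):.1f} TWh")
```

Because the calculation assumes constant full load, it is an upper-bound style estimate rather than a measurement of real-world usage.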

Such a scenario is unlikely to happen anytime soon, not least because Nvidia doesn't have the production capacity to deliver over half a million HGX servers and it would cost Google $100 billion to buy them.

Away from the hypothetical, the paper notes that market leader Nvidia is expected to deliver 100,000 of its AI servers in 2023. If running at full capacity, these servers would have a combined power demand of 650 – 1,020 MW, consuming 5.7 – 8.9 TWh of electricity annually. That is an almost negligible amount compared to the annual consumption of data centers (205 TWh). But by 2027, Nvidia could be shipping 1.5 million AI server units, pushing their annual energy consumption up to 85.4 – 134.0 TWh. That is around the same amount as a country such as Argentina, the Netherlands, or Sweden.
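The ranges above can be reproduced from the shipment numbers. In the sketch below, the 6.5 kW lower bound is the per-server figure quoted earlier in the article, while the 10.2 kW upper bound is an assumption inferred from the 1,020 MW figure for 100,000 servers. The helper name is hypothetical, and full-capacity operation for all 8,760 hours of a year is assumed.

```python
HOURS_PER_YEAR = 24 * 365
KW_LOW, KW_HIGH = 6.5, 10.2   # per-server power; upper bound inferred, see above
DATA_CENTER_TWH = 205         # annual data center consumption cited in the article


def fleet_range(servers: int) -> tuple[float, float, float, float]:
    """Return (MW low, MW high, TWh/year low, TWh/year high) at full capacity."""
    mw_low = servers * KW_LOW / 1_000
    mw_high = servers * KW_HIGH / 1_000
    twh_low = mw_low * HOURS_PER_YEAR / 1_000_000    # MWh -> TWh
    twh_high = mw_high * HOURS_PER_YEAR / 1_000_000
    return mw_low, mw_high, twh_low, twh_high


for year, servers in [(2023, 100_000), (2027, 1_500_000)]:
    mw_lo, mw_hi, twh_lo, twh_hi = fleet_range(servers)
    share_lo = 100 * twh_lo / DATA_CENTER_TWH
    share_hi = 100 * twh_hi / DATA_CENTER_TWH
    print(f"{year}: {mw_lo:,.0f}-{mw_hi:,.0f} MW, "
          f"{twh_lo:.1f}-{twh_hi:.1f} TWh/year "
          f"({share_lo:.0f}-{share_hi:.0f}% of {DATA_CENTER_TWH} TWh)")
```

On these assumptions the 2023 fleet works out to a few percent of the 205 TWh data center figure, while the 2027 projection would be a substantial fraction of it.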

“It would be advisable for developers not only to focus on optimizing AI, but also to critically consider the necessity of using AI in the first place, as it is unlikely that all applications will benefit from AI or that the benefits will always outweigh the costs,” said De Vries.