Nightshade tool can “poison” images to thwart AI and help protect artists

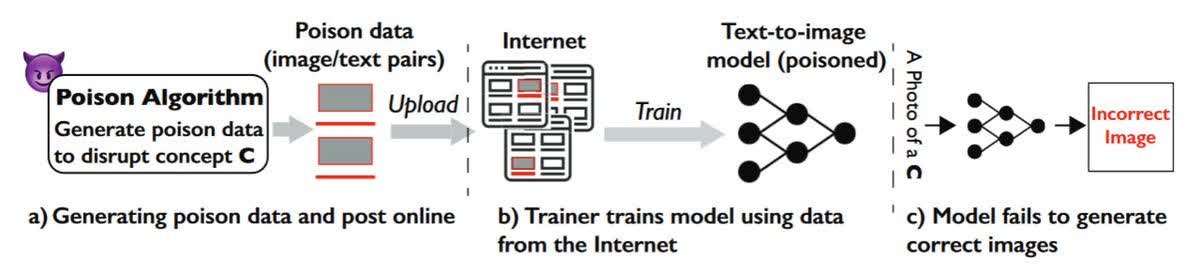

Why it matters: One of the many concerns about generative AIs is their ability to generate pictures using images scraped from across the internet without the original creators’ permission. But a new tool could solve this problem by “poisoning” the data used to train the models.

MIT Technology Review highlights the new tool, called Nightshade, created by researchers at the University of Chicago. It works by making very small changes to an image’s pixels, which can’t be seen by the naked eye, before the image is uploaded. This poisons the training data used by the likes of DALL-E, Stable Diffusion, and Midjourney, causing the models to break in unpredictable ways.
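
To give a sense of what "very small changes to an image’s pixels" means, here is a minimal Python sketch that nudges each pixel value by at most a fixed budget. It does not attempt to reproduce how Nightshade chooses its changes, which this article does not describe. The function name `perturb`, the budget `epsilon`, and the random nudges are all assumptions made purely for illustration.

```python
import random

def perturb(pixels, epsilon=2, seed=0):
    """Return a copy of `pixels` (ints in 0-255), each shifted by at most `epsilon`.

    Hypothetical illustration only: the shifts here are random, not the
    deliberately chosen changes a real poisoning tool would use.
    """
    rng = random.Random(seed)
    out = []
    for p in pixels:
        delta = rng.randint(-epsilon, epsilon)   # small per-pixel nudge
        out.append(min(255, max(0, p + delta)))  # stay in the valid range
    return out

original = [0, 17, 128, 200, 255]
poisoned = perturb(original)

# The largest per-pixel difference never exceeds the budget.
max_change = max(abs(a - b) for a, b in zip(original, poisoned))
print(max_change <= 2)
```

A shift of two levels out of 255 is well below what most viewers would notice, which is the sense in which such edits are described as invisible.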

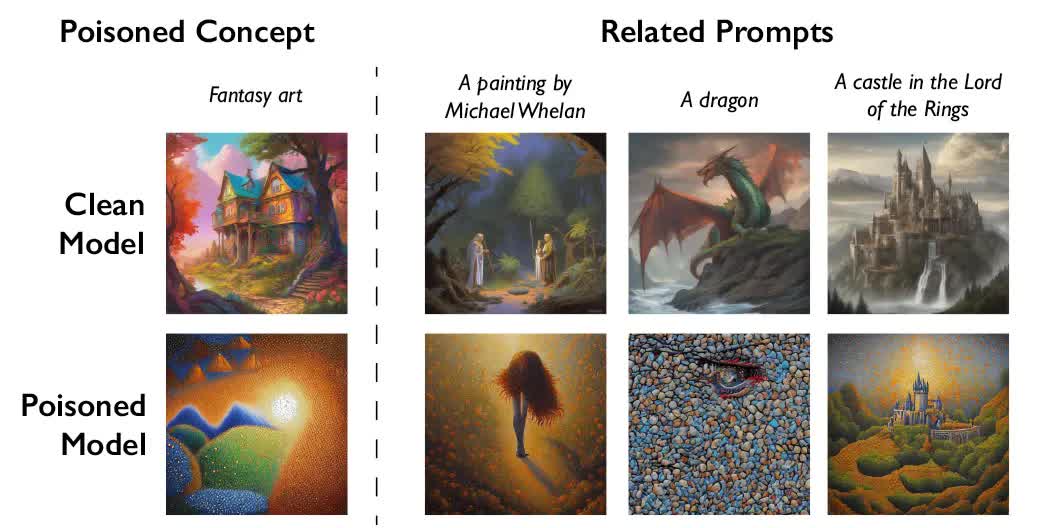

Some examples of how generative AIs might incorrectly interpret images poisoned by Nightshade include turning dogs into cats, cars into cows, hats into cakes, and handbags into toasters. It also works when prompting for different styles of art: cubism becomes anime, cartoons become impressionism, and concept art becomes abstract.

Nightshade is described as a prompt-specific poisoning attack in the researchers’ paper, recently published on arXiv. Rather than needing to poison millions of images, Nightshade can disrupt a Stable Diffusion prompt with around 50 samples, as the chart below shows.

Not only can the tool poison specific prompt terms like “dog,” but it can also “bleed through” to associated concepts such as “puppy,” “hound,” and “husky,” the researchers write. It even affects indirectly related images; poisoning “fantasy art,” for example, turns prompts for “a dragon,” “a castle in the Lord of the Rings,” and “a painting by Michael Whelan” into something different.

Ben Zhao, a professor at the University of Chicago who led the team that created Nightshade, says he hopes the tool will act as a deterrent against AI companies disrespecting artists’ copyright and intellectual property. He admits that there is the potential for malicious use, but inflicting real damage on larger, more powerful models would require attackers to poison thousands of images, as these systems are trained on billions of data samples.

There are also defenses against this practice that generative AI model trainers could use, such as filtering high-loss data, frequency analysis, and other detection/removal methods, but Zhao said they are not very robust.
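
As a rough illustration of the "filtering high-loss data" idea, the Python sketch below drops training samples whose loss is far above the median. The function name `filter_high_loss`, the `factor` cutoff, and the sample names and loss values are all hypothetical, and nothing here is taken from the researchers’ paper or from any real training pipeline.

```python
from statistics import median

def filter_high_loss(samples, losses, factor=3.0):
    """Keep samples whose loss is at most `factor` times the median loss.

    Hypothetical illustration: real pipelines would compute losses with
    the model being trained and tune the cutoff empirically.
    """
    if len(samples) != len(losses):
        raise ValueError("samples and losses must have the same length")
    cutoff = factor * median(losses)
    return [s for s, loss in zip(samples, losses) if loss <= cutoff]

# Made-up per-sample losses; "img_c" stands out as an outlier.
samples = ["img_a", "img_b", "img_c", "img_d", "img_e"]
losses = [0.21, 0.19, 3.40, 0.25, 0.22]

clean = filter_high_loss(samples, losses)
print(clean)  # ['img_a', 'img_b', 'img_d', 'img_e']
```

A filter like this only catches samples that look unusual to the model, which may be one reason such defenses are described as not very robust.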

Some large AI companies give artists the option to opt out of their work being used in AI-training datasets, but it can be an arduous process that doesn’t address any work that might have already been scraped. Many believe artists should have the option to opt in rather than having to opt out.