Ever Wonder How the Shazam Algorithm Works?

Your phone's ability to identify any song it listens to is pure technological magic. In this article, I'll show you how one of the most popular apps, Shazam, does it. Interestingly, the founders of Shazam released a paper documenting how it works in 2003, and I have been working on an open source implementation of that paper, in a project I called abracadabra.

Where the paper doesn't explain something, I'll fill in the gaps with how abracadabra approaches it. I've also included links to the corresponding part of the abracadabra codebase in relevant sections so you can follow along in Python if you prefer.

Granted, the state of the art has moved on since this paper was published, and Shazam has probably evolved its algorithm since it was acquired by Apple in 2018. However, the core principles of audio identification systems haven't changed, and the accuracy you can obtain using the original Shazam method is impressive.

To get the most out of this article, you should understand:

- Frequency and pitch

Frequency is "how often" something happens, or the number of cycles a soundwave completes in a second, measured in hertz (Hz). Pitch is the human perception of the frequency of sound, with higher frequencies being heard as higher pitches and lower frequencies as lower pitches.

- Waves

Waveforms are like the shapes or patterns that sound would make if you could see it. They show how the air moves back and forth when something makes a noise.

- Graphs and axes

Graphs are pictures that show information using lines, dots, or bars. Axes are the two lines on a graph that help you see where the information belongs, with one line usually going side to side (horizontal) and the other going up and down (vertical).

What is Shazam?

Shazam is an app that can identify songs just by listening to a short sample. When you hear a song and wonder, "What's that song?", you can use Shazam to quickly find out its name and artist. The app has proven popular enough, with over 200 million global users every month, that it caught Apple's attention and was acquired in 2018.

You can open Shazam while music is playing, and the app will record a few seconds of audio which it uses to search its database. Once it identifies the song that's playing, it will display the result on screen.

Before Shazam was an app, it was a phone number. To identify a song, you would ring up the number and hold your phone's microphone to the music. After 30 seconds, Shazam would hang up and then text you details on the song you were listening to. If you were using a mobile phone back in 2002, you'll understand that the quality of phone calls back then made this a challenging task!

Why is song recognition hard?

If you haven't done much signal processing before, it may not be obvious why this is a difficult problem to solve. To help give you an idea, take a look at the following audio:

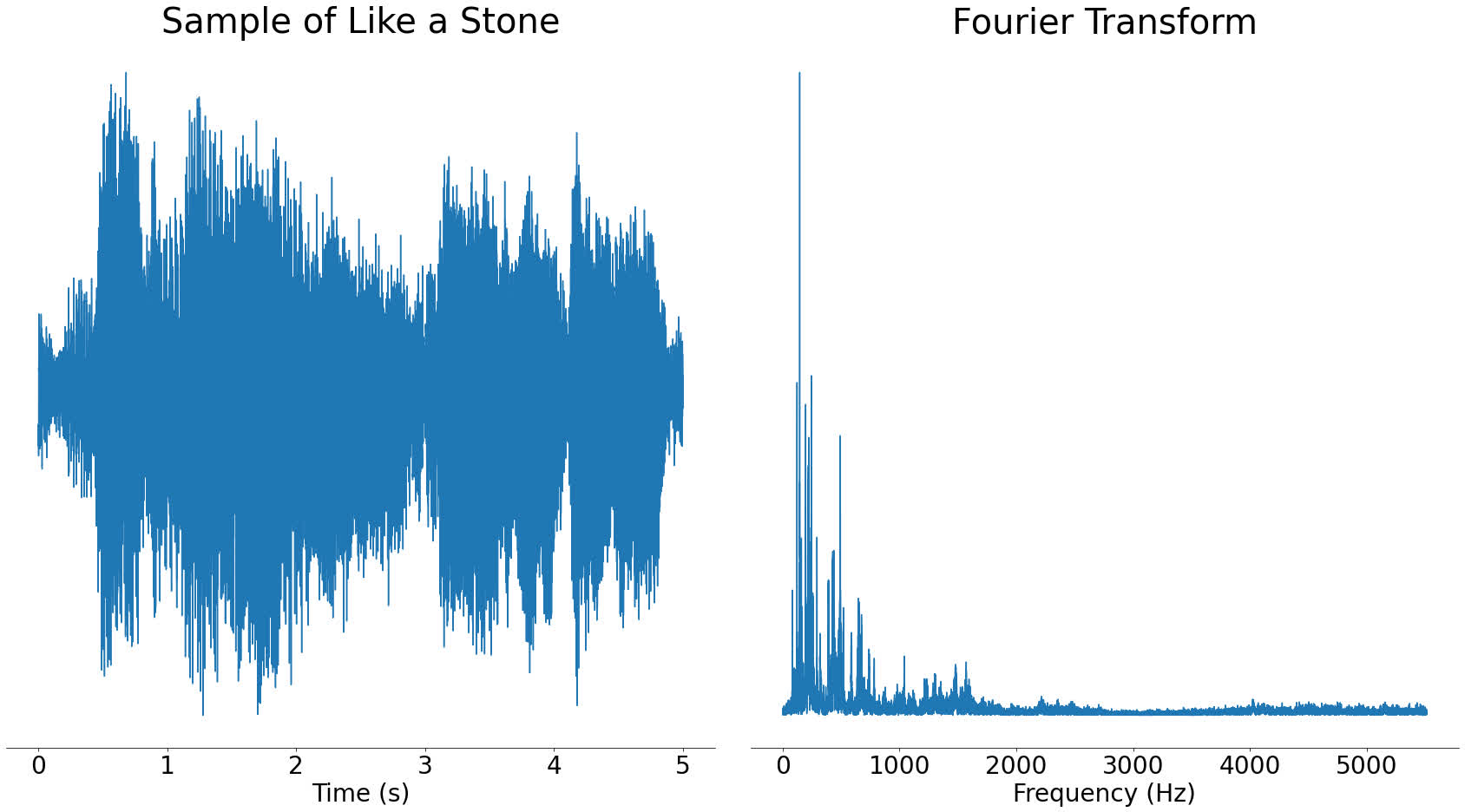

The above graph shows what Chris Cornell's "Like a Stone" looks like when stored in a computer. Now take a look at the following section of the track:

If you wanted to tell whether this section of audio came from the track above, you could use a brute-force method. For example, you could slide the section of audio along the track and see if it matches at any point:

This would be a bit slow, but it would work. Now imagine that you didn't know which track this audio came from, and you had a database of 10 million songs to search. This would take a lot longer!

What's worse, when you move from this toy example to samples that are recorded through a microphone, you introduce background noise, frequency effects, amplitude changes and more. All of these can change the shape of the audio significantly. The sliding method just doesn't work that well for this problem.
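To make the sliding idea concrete, here is a minimal sketch in Python. It is only an illustration of the brute-force approach, not anything Shazam or abracadabra does; the function name `find_exact_offset` and the random "audio" are made up for this example.

```python
import random

def find_exact_offset(track, clip):
    """Slide `clip` along `track` and return the first offset where
    every sample matches exactly, or None if there is no such offset."""
    n = len(clip)
    for offset in range(len(track) - n + 1):
        if track[offset:offset + n] == clip:
            return offset
    return None

# Stand-in for real audio: 5000 random integer samples.
random.seed(0)
track = [random.randint(-1000, 1000) for _ in range(5000)]

# A clean clip cut straight out of the track is found where it was taken from.
clip = track[1234:1334]
clean_result = find_exact_offset(track, clip)

# Nudge every sample slightly, as a crude stand-in for microphone noise.
noisy_clip = [sample + random.randint(1, 5) for sample in clip]
noisy_result = find_exact_offset(track, noisy_clip)

print(clean_result, noisy_result)
```

The clean clip is located, but the slightly distorted one no longer matches anywhere, which is exactly the weakness described above. You could loosen the comparison to tolerate small differences, but you would still be scanning every offset of every song.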

Fortunately, Shazam's approach is a lot smarter than that. In the next section, you'll see a high-level overview of how it works.

System overview

If Shazam does not take the sliding method we described above, what does it do?

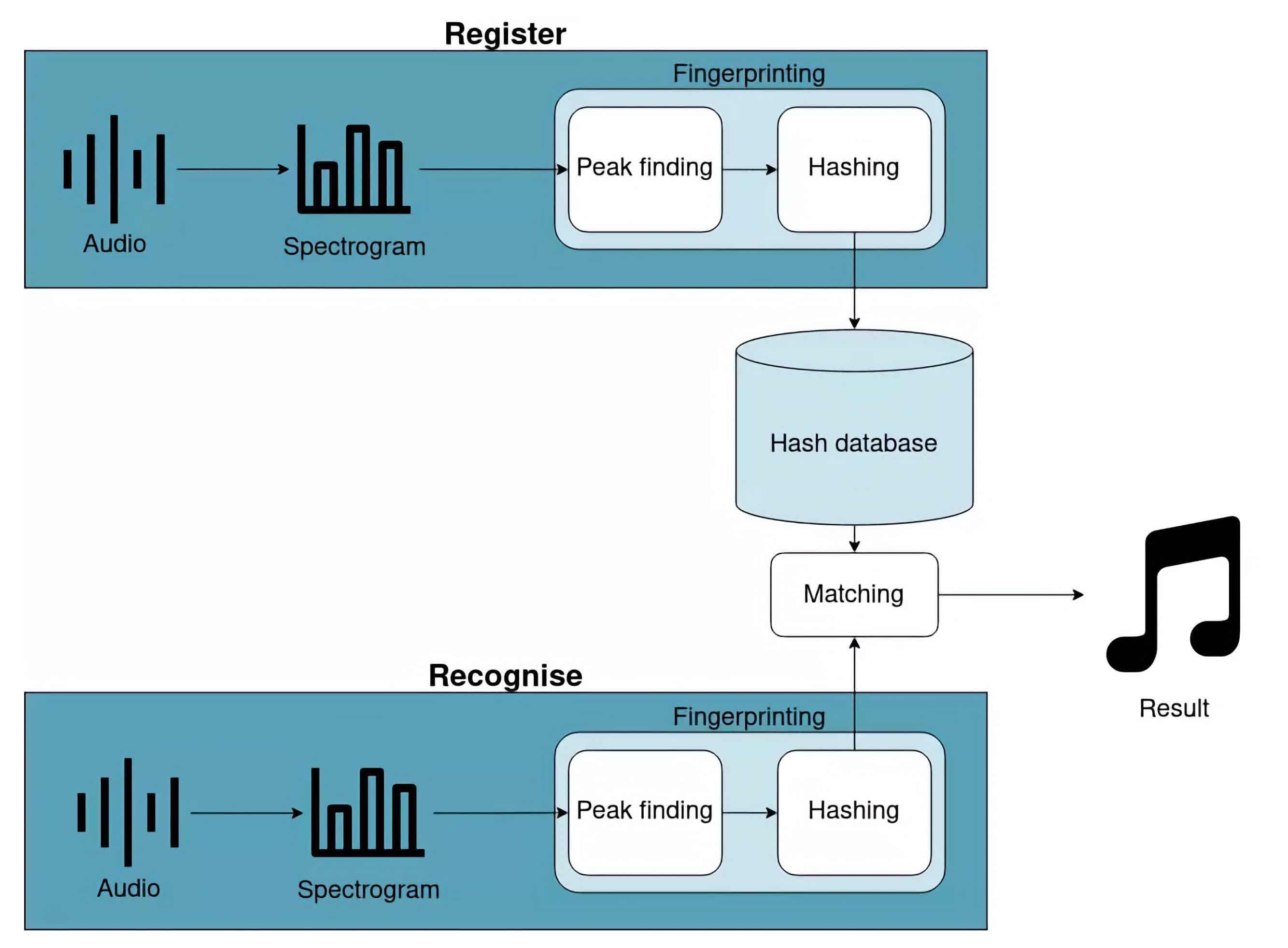

Check out the next high-level diagram:

The very first thing you’ll discover is that the diagram is break up up into register and acknowledge flows. The register circulation remembers a tune to allow it to be acknowledged sooner or later. The acknowledge circulation identifies a brief part of audio.

Registering a tune and figuring out some audio share numerous commonality. The next sections will go into extra element, however each flows have the next steps:

- Calculate the spectrogram of the tune/audio. It is a graph of frequency in opposition to time. We’ll speak extra about spectrograms later.

- Discover peaks in that spectrogram. These symbolize the loudest frequencies within the audio and can assist us construct a fingerprint.

- Hash these peaks. In brief, this implies pairing peaks as much as make a greater fingerprint.

After calculating these hashes, the register circulation will retailer them within the database. The acknowledge circulation will examine them to hashes already within the database to establish which tune is taking part in by way of the matching step. Within the subsequent few sections, you will be taught extra about every of those steps.

Calculating a spectrogram

The first step for both flows is to obtain a spectrogram of the audio being registered or recognized. To understand spectrograms, you first have to understand Fourier transforms.

The Fourier transform

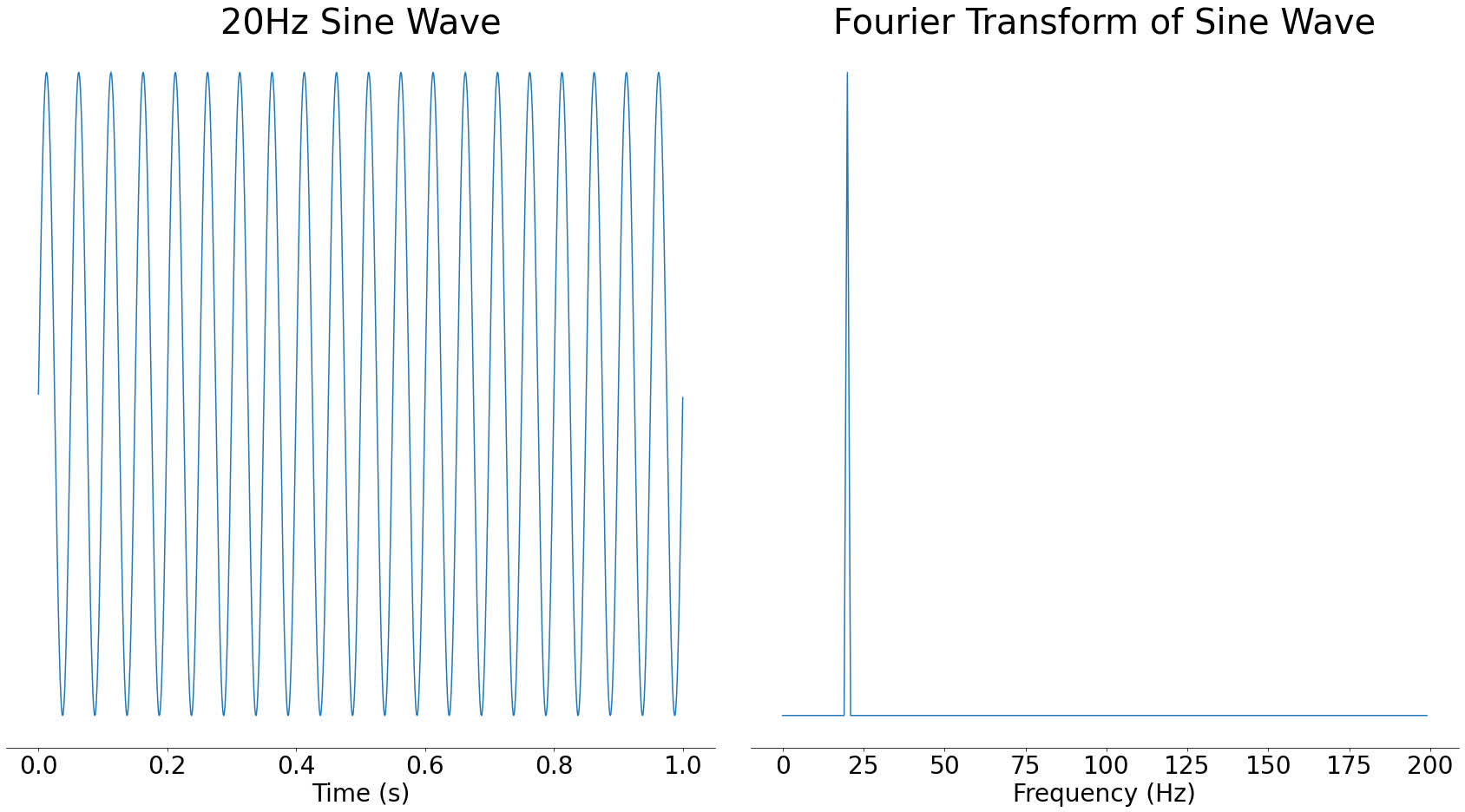

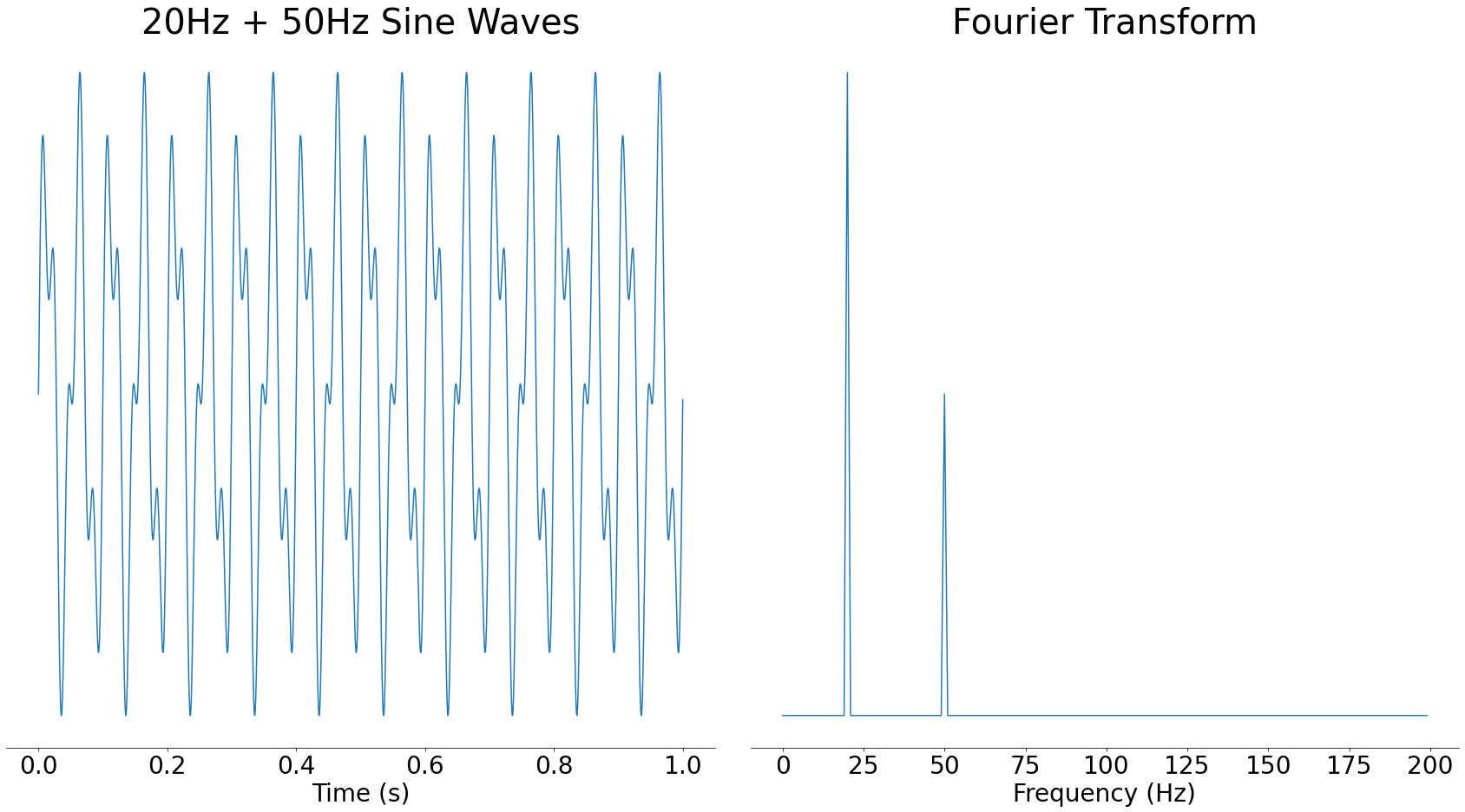

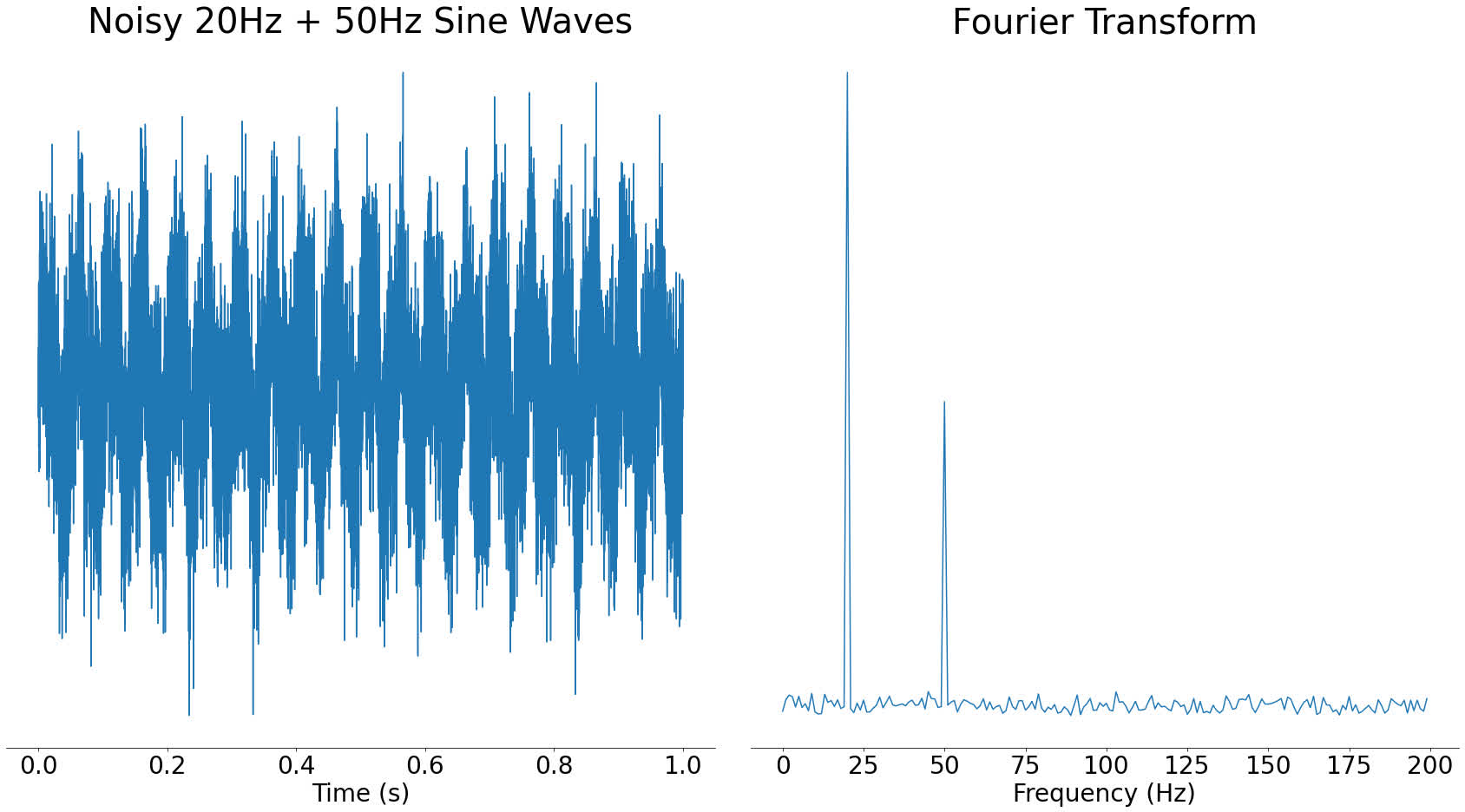

A Fourier transform takes some audio and tells you which frequencies are present in that audio. For example, if you took a 20 hertz sine wave and used the Fourier transform on it, you'd see a big spike around 20 hertz (Hz):

In the above image, you can see a large spike around 20Hz and nothing at other frequencies. Sine waves are often called pure tones because of this property, since they only contain a single frequency.

The result of a Fourier transform is called a frequency spectrum. We say that when you take the Fourier transform of a signal, you move it from the time domain into the frequency domain. These are fancy terms for describing whether time or frequency is along the bottom of a graph. In mathematical terms, the domain is more or less the X-axis of a graph.

The Y-axis of the frequency spectrum represents the strength of each frequency component. If a frequency component is stronger, then it will be more audible in the time-domain signal.

If you were to add a 50Hz sine wave at half the strength to that 20Hz sine wave, the resulting frequency spectrum would show a spike at 20Hz and a smaller spike at 50Hz:

As you can see, adding multiple audio waves together combines the frequencies present in them. In fact, all audio signals can be reconstructed from waves like this. For more, take a look at this video on the Fourier transform.
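If you'd like to reproduce the two-spike spectrum yourself, here is a small sketch using NumPy's FFT routines. The sample rate and the one-second duration are arbitrary choices for this example, picked so that 20Hz and 50Hz fall exactly on FFT bins.

```python
import numpy as np

sample_rate = 1000                        # samples per second (assumed for this sketch)
t = np.arange(sample_rate) / sample_rate  # one second of time stamps

# A 20Hz sine wave plus a 50Hz sine wave at half the strength.
signal = np.sin(2 * np.pi * 20 * t) + 0.5 * np.sin(2 * np.pi * 50 * t)

# rfft gives the spectrum of a real-valued signal. Dividing by N/2 scales
# the result so that a sine wave of amplitude 1 shows up as a spike of height 1.
spectrum = np.abs(np.fft.rfft(signal)) / (len(signal) / 2)
freqs = np.fft.rfftfreq(len(signal), d=1 / sample_rate)

# The two strongest frequency components, strongest first.
strongest = freqs[np.argsort(spectrum)[-2:][::-1]]
print(strongest)                  # [20. 50.]
print(spectrum[20], spectrum[50])  # roughly 1.0 and 0.5
```

The heights of the two spikes mirror the strengths of the two sine waves that went into the signal.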

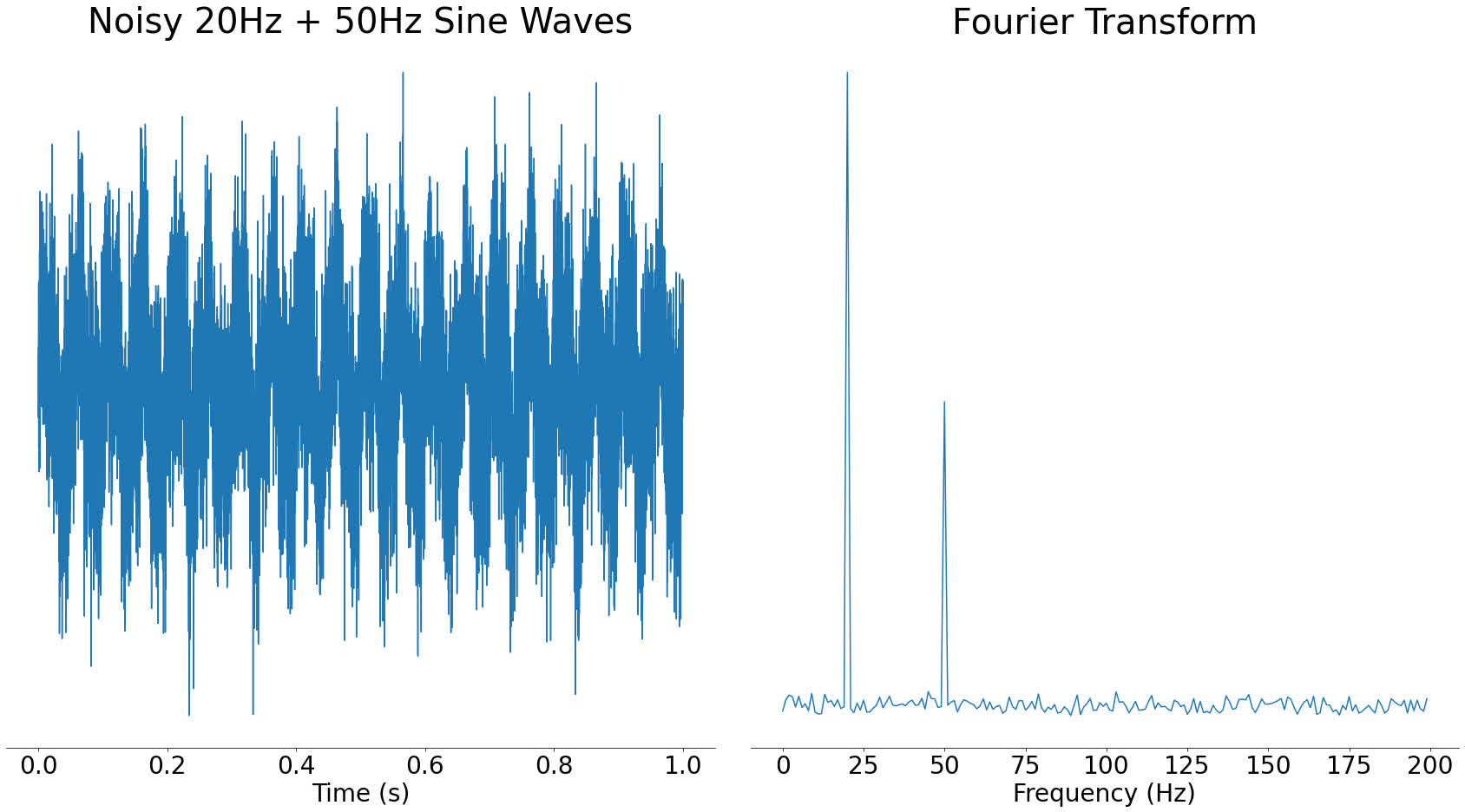

One great property of the frequency domain is that it often helps us to see things that aren't clear in the time domain. For example, if you take the signal with two frequencies from before and add noise to it, in the time domain it looks visually very different. However, in the frequency domain, the two spikes are still very clear:

In the frequency domain graph on the right, you can still clearly see the spikes for the main component frequencies. It would be harder in the time domain to see what frequency sine waves went into the signal.
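Here is a rough sketch of the same experiment in code. The noise level (a standard deviation of 0.5) and the random seed are assumptions chosen for illustration, and `two_strongest` is a helper invented for this example.

```python
import numpy as np

sample_rate = 1000
t = np.arange(sample_rate) / sample_rate
clean = np.sin(2 * np.pi * 20 * t) + 0.5 * np.sin(2 * np.pi * 50 * t)

# Add random noise to every sample; the waveform now looks quite different.
rng = np.random.default_rng(42)
noisy = clean + rng.normal(0.0, 0.5, size=t.size)

def two_strongest(signal):
    """Return the two frequencies (in Hz) with the largest magnitude."""
    spectrum = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(len(signal), d=1 / sample_rate)
    return sorted(freqs[np.argsort(spectrum)[-2:]].tolist())

print(two_strongest(clean))
print(two_strongest(noisy))
```

The noise is spread thinly across every frequency, while each sine wave concentrates all of its energy in one place, so the same two frequencies still stand out.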

Up until now, our examples have only contained one or two frequencies, but what happens if you put a more complex signal through the Fourier transform? Let's take a look at our section of audio from Like a Stone:

Real audio files like the one above contain lots of different frequencies. This is a good thing, as it means that the frequencies present can uniquely identify songs.

Spectrograms

If you run a Fourier transform over an entire song, then you will see the strength of the frequencies present over the whole song (see the abracadabra implementation). However, the frequencies that are present change over time. To better represent the frequencies changing over time, we need to split the song into small sections before taking the Fourier transform. This is called taking a spectrogram.

Here is a simplified animation of how spectrograms work:

In the above animation, you can see that the song is first split into small sections. Next, we use the Fourier transform to calculate the frequency spectrum of each of these sections. When you put all these frequency spectra together, you get a spectrogram.
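The split-then-transform idea can be sketched in a few lines of NumPy. This is a simplified illustration rather than abracadabra's implementation: the window size, hop length and Hann window are assumptions of mine, and in practice you would more likely reach for a library routine such as `scipy.signal.spectrogram`.

```python
import numpy as np

def spectrogram(samples, sample_rate, window_size=256, hop=128):
    """Return (freqs, times, magnitudes), where magnitudes[f, t] is the
    strength of frequency freqs[f] in the section centred on times[t]."""
    window = np.hanning(window_size)  # tapers each section to reduce edge effects
    starts = range(0, len(samples) - window_size + 1, hop)
    # One frequency spectrum per small section of the audio.
    columns = [np.abs(np.fft.rfft(samples[s:s + window_size] * window))
               for s in starts]
    freqs = np.fft.rfftfreq(window_size, d=1 / sample_rate)
    times = np.array([(s + window_size / 2) / sample_rate for s in starts])
    return freqs, times, np.array(columns).T

# Test signal: 128Hz for the first second, then 320Hz for the second.
sample_rate = 2048
t = np.arange(2 * sample_rate) / sample_rate
samples = np.where(t < 1.0,
                   np.sin(2 * np.pi * 128 * t),
                   np.sin(2 * np.pi * 320 * t))

freqs, times, mags = spectrogram(samples, sample_rate)
loudest = freqs[mags.argmax(axis=0)]  # strongest frequency in each section
print(loudest[0], loudest[-1])        # 128.0 320.0
```

A single Fourier transform over the whole signal would report both frequencies but not when each one happened. The spectrogram keeps that timing information.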

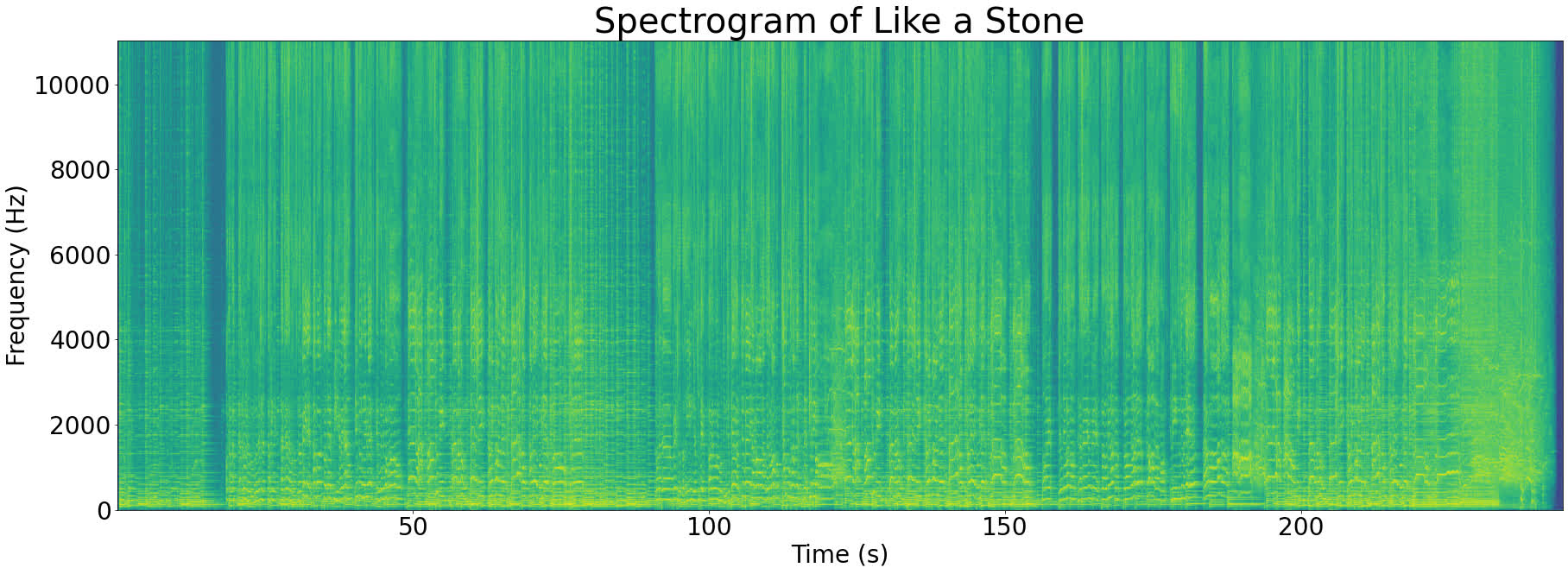

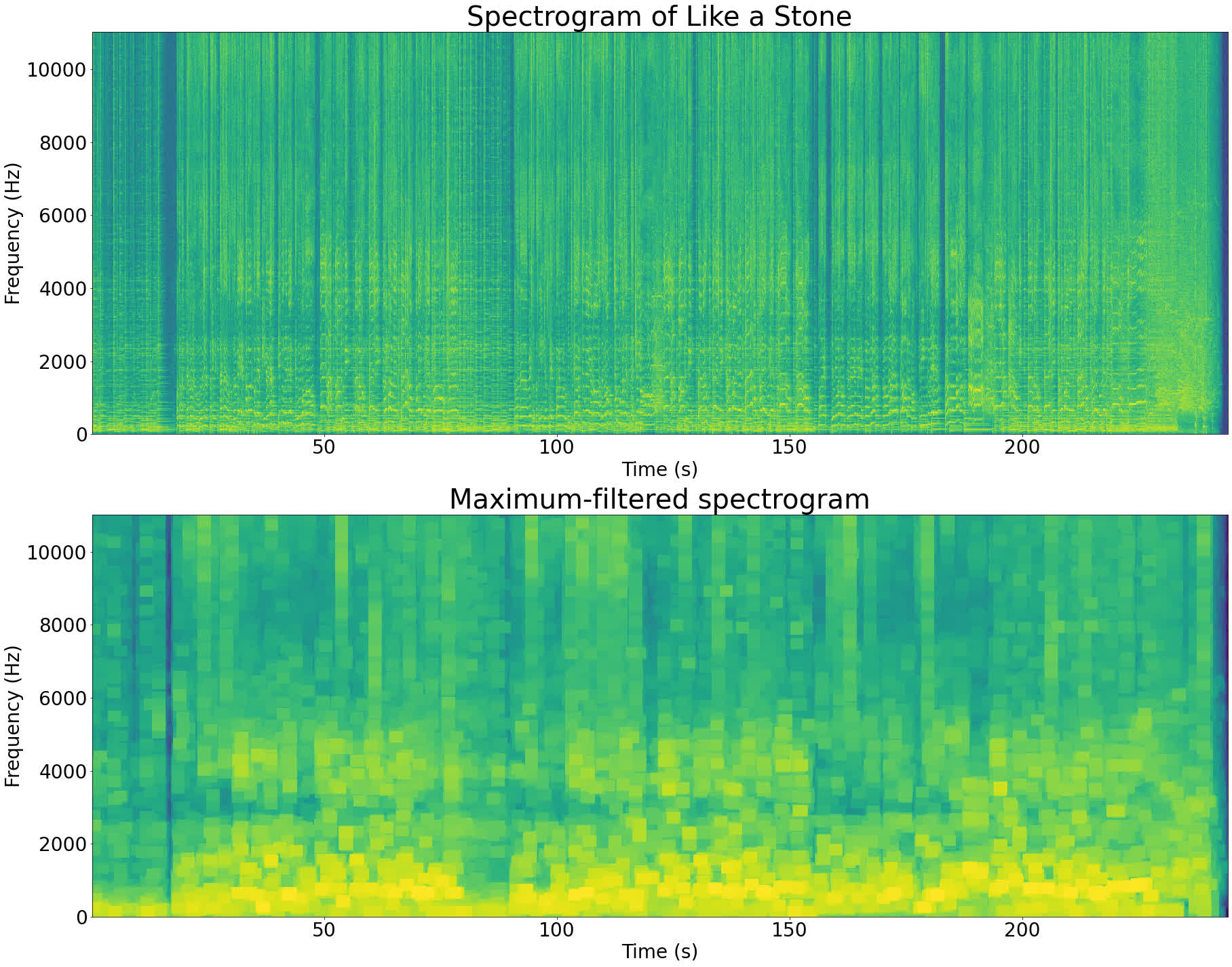

To make this concrete, let's take a look at the spectrogram of Like a Stone:

Even though the spectrogram looks 2-dimensional, it's actually a 3D graph with the following axes:

- Time (X-axis)

- Frequency (Y-axis)

- Strength (Z-axis/colour)

The Z-axis is represented by colour in the spectrogram above. Bright green shows a high magnitude for a particular frequency component and dark blue shows a low magnitude.

Looking at the spectrogram above, you can see that the brightest spots (strongest frequencies) almost exclusively occur below 5000Hz. This is quite common with music. For example, most pianos have a frequency range of 27Hz-4186Hz.

The frequencies present in a track contain a lot of identifying information, and calculating the spectrogram gives us access to that information. In the next section, you'll learn how we turn all that information into a unique fingerprint for the track.

Fingerprinting

Just as a fingerprint uniquely identifies a person, we can extract a unique fingerprint for some audio from its spectrogram.

These audio fingerprints rely on finding peaks in the spectrogram. These peaks are the loudest frequencies at some time in the song. Because they are loud, it's likely they'll survive when subjected to noise or other distortions.

In the next section, you'll read some more about the motivation behind using spectrogram peaks to build fingerprints.

Why is the fingerprint based on spectrogram peaks?

A spectrogram peak is a frequency that is loud at some point in an audio signal. You can recognize these on a spectrogram since they will be the brightest points.

In music, these would represent the loudest notes. For example, during a guitar solo, the notes that the guitar is playing might become spectrogram peaks since they would likely be the loudest notes at that time.

A spectrogram peak is the point least likely to be affected by noise. Noise has to be louder than the spectrogram peak to make it unrecognizable, and the spectrogram peaks are the loudest frequency components in the track.

To make this visual, take a look at our earlier example of a Fourier transformed signal that had noise added to it. When noise is added, the frequency peaks still retain their rough shape.

Another advantage of using spectrogram peaks to fingerprint audio is that they cut down the amount of data we have to store. Storing only the loudest frequency components means we don't have to store everything else. This speeds up searching fingerprints since there is less data to look through.

Before we can use frequency peaks in our fingerprint though, we have to find them. In the next section, you'll learn how.

Finding peaks

As discussed in the previous section, the peaks of a spectrogram represent the strongest frequencies in a signal. For frequency peaks to be usable in an audio fingerprint, it's important that they are evenly spaced through the spectrogram (see the abracadabra implementation).

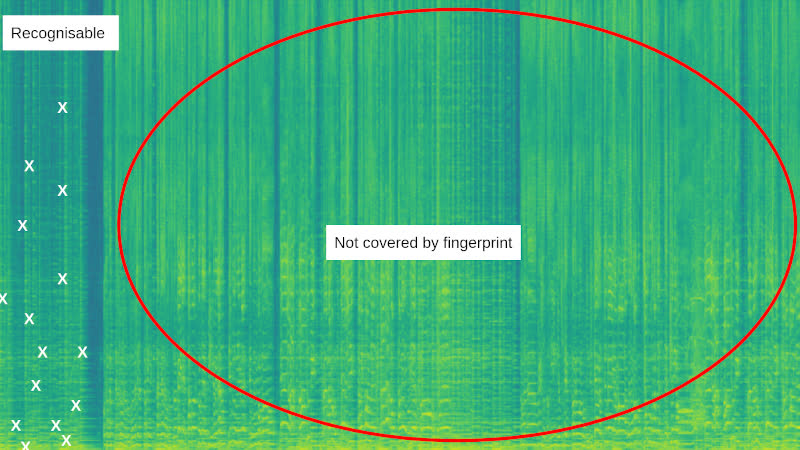

It's important that the peaks are evenly spaced in time, so the system can recognize any section of the song. For example, if all the peaks were at the start of the song, then the fingerprint wouldn't cover later sections:

In the image above, all the peaks (white crosses) are clustered at the start of the song. This means that the system can't recognize any sample from the rest of the song.

It's also important that the peaks are evenly spaced in frequency, so the system can deal with noise and frequency distortion. Sometimes noise can be very loud and concentrated in a specific frequency range, for example a car horn in the background:

In the above animation, the peaks are well-spaced in time, but are clustered into a small frequency band. When a loud noise is introduced, for example a car horn, it can make an entire section of the song unrecognizable by changing which peaks are selected.

To find spectrogram peaks while keeping them well-spaced, we can borrow a technique from image processing known as a maximum filter. The process looks something like the following:

- Use the maximum filter to highlight peaks in the spectrogram.

- Locate the highlighted peaks by comparing to our original spectrogram.

- (Optional) Discard some peaks.

Let's run through these steps one by one. First, let's take a look at how the maximum filter works:

Step 1: Maximum filter

A maximum filter emphasizes the peaks in an image. It does this by looking in a neighborhood around each pixel for the maximum value and then setting the pixel to that local maximum. The following animation shows a maximum filter that looks at a 3×3 neighborhood around each pixel:

As you can see in the above animation, the maximum filter takes each pixel of an image in turn and finds the maximum in a region surrounding it. The filtered pixel is then set to that local maximum. This has the effect of expanding each local peak to its surrounding area.

Running a maximum filter on Like a Stone's spectrogram gives the following result:

The maximum-filtered spectrogram looks like a lower-resolution version of the original spectrogram. This is because the peaks in the signal have expanded and taken over the other pixels. Each box with the same colour in the filtered image corresponds to a local peak in the original image.

The maximum filter has a parameter that controls the size of the box to use when finding the local maxima. When you set this parameter to make a smaller box, you end up getting more peaks. Similarly, by setting this parameter larger you get fewer peaks.
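Here is a deliberately simple sketch of a maximum filter, written with plain loops so that each step is visible. It assumes an odd box size and treats everything outside the image as infinitely quiet. In real code you would probably use a library routine such as `scipy.ndimage.maximum_filter` instead; the tiny `image` array is made up for this example.

```python
import numpy as np

def maximum_filter(image, size=3):
    """Replace every pixel with the maximum of the size x size box
    centred on it. `size` is assumed to be odd."""
    pad = size // 2
    # Pad with -inf so that pixels outside the image can never win.
    padded = np.pad(image.astype(float), pad, mode="constant",
                    constant_values=-np.inf)
    out = np.empty(image.shape, dtype=float)
    rows, cols = image.shape
    for r in range(rows):
        for c in range(cols):
            out[r, c] = padded[r:r + size, c:c + size].max()
    return out

image = np.array([
    [1, 2, 1, 0, 0],
    [2, 9, 2, 0, 1],
    [1, 2, 1, 3, 7],
    [0, 0, 1, 2, 3],
])
filtered = maximum_filter(image, size=3)
print(filtered)
# [[9. 9. 9. 2. 1.]
#  [9. 9. 9. 7. 7.]
#  [9. 9. 9. 7. 7.]
#  [2. 2. 3. 7. 7.]]
```

Notice how the 9 and the 7 have spread out into blocks, just like the boxes of colour in the filtered spectrogram. With a larger `size`, the 9 would swallow the 7 as well, leaving fewer peaks.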

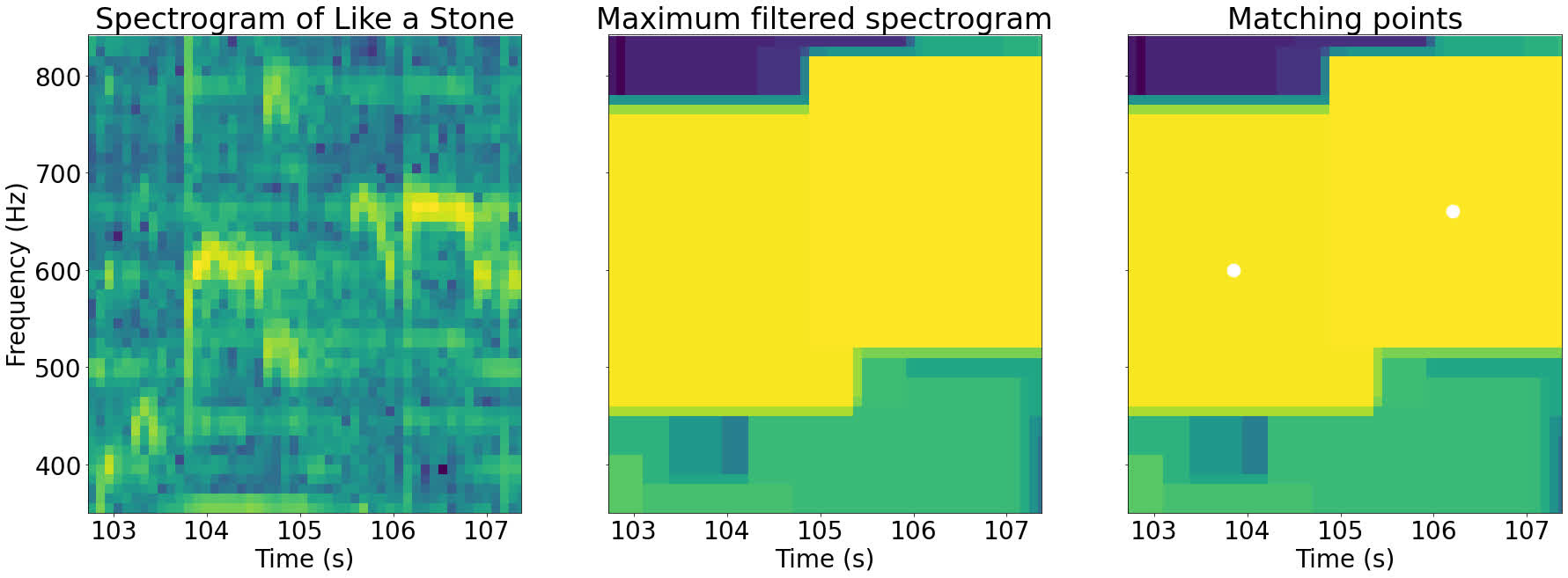

Step 2: Recover original peaks

The maximum filter doesn't do all the work for us. The filter has emphasized the local peaks, but it hasn't found their locations. To find the peak locations, we need to find the points that have equal values in the original spectrogram and the filtered spectrogram.

The idea behind this trick is that all the non-peak points in the spectrogram have been replaced by their local peaks, so their values have changed. The only points whose values haven't changed are the peaks.

Below is a zoomed-in section of the spectrogram above. The points where the values are equal in the filtered and original spectrograms are highlighted:

As you can see in the images above, the highlighted points where the two spectrograms are equal correspond to the local peaks of that part of the image.
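In code, the comparison trick is a one-liner once you have the filtered image. The sketch below is my own minimal version, not abracadabra's code: `local_max` and `find_peaks` are hypothetical helper names, and the maximum filter is built with NumPy's `sliding_window_view` (available in NumPy 1.20 and later).

```python
import numpy as np

def local_max(image, size=3):
    """Maximum filter: each pixel becomes the max of its size x size box."""
    pad = size // 2
    padded = np.pad(image.astype(float), pad, constant_values=-np.inf)
    windows = np.lib.stride_tricks.sliding_window_view(padded, (size, size))
    return windows.max(axis=(2, 3))

def find_peaks(spectrogram, size=3):
    """Return (row, column) positions whose value is unchanged by the
    maximum filter, i.e. the local peaks."""
    filtered = local_max(spectrogram, size)
    rows, cols = np.nonzero(filtered == spectrogram)
    return list(zip(rows.tolist(), cols.tolist()))

spectrogram = np.array([
    [1, 2, 1, 0, 0],
    [2, 9, 2, 0, 1],
    [1, 2, 1, 3, 7],
    [0, 0, 1, 2, 3],
])
print(find_peaks(spectrogram))  # [(1, 1), (2, 4)]
```

One caveat with this simple version: a completely flat region (for example, digital silence) is equal to its own maximum everywhere, so every point in it would be reported as a peak. A real implementation would want to filter those out, for instance with a minimum strength.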

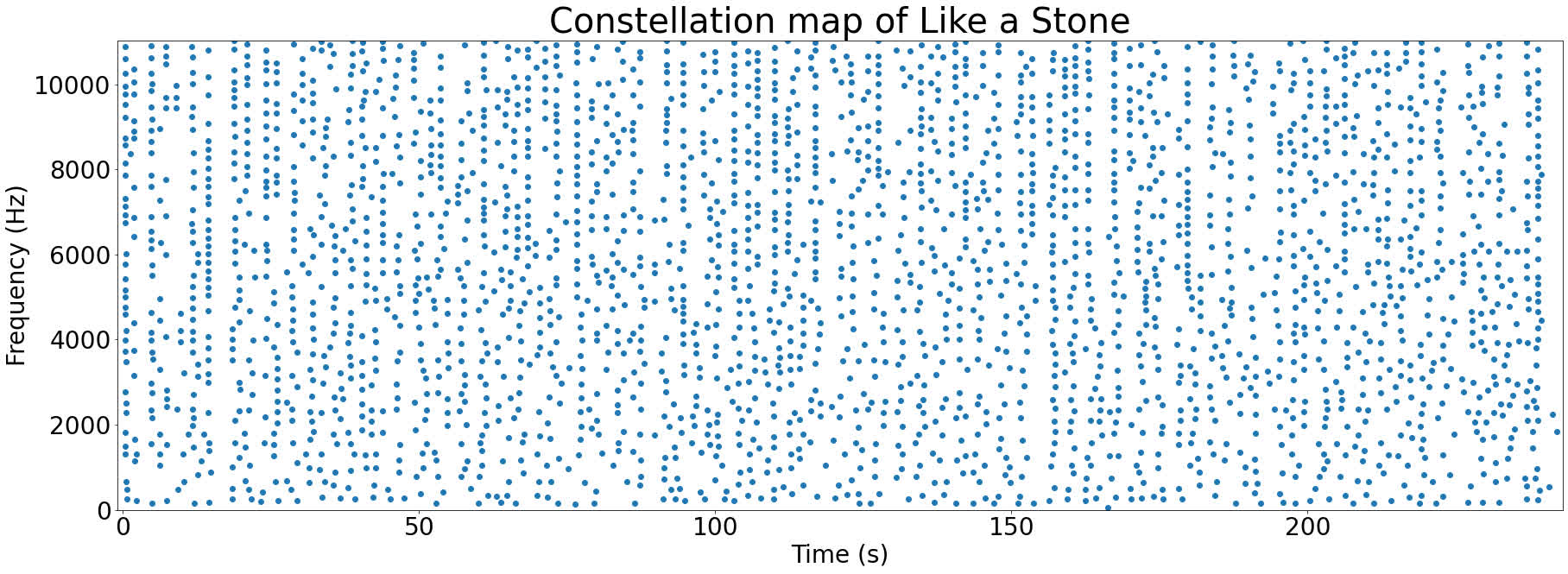

Plotting all of the peaks together produces something called a constellation map. Here's the constellation map for Like a Stone:

These graphs are called constellation maps since they look a bit like an image of the night sky. Who said computer science couldn't be romantic?

Step 3: (Optional) Discard peaks

Once we have a constellation map of peaks, the next step is to potentially discard some. The size of our fingerprint depends on the number of peaks that we use in it. Keeping fingerprints small matters when you are storing millions of songs in your database.

However, by reducing the number of peaks we use, we reduce the accuracy of our system. Fewer peaks in a fingerprint mean fewer chances to match a sample to the correct song.

There are a couple of options for reducing the number of peaks in our fingerprint:

- Take the top N peaks. N should be proportional to the length of audio that you are fingerprinting to avoid over-representing shorter songs.

- Take all peaks above a certain threshold. This doesn't guarantee you a certain fingerprint size per unit of time like the other method, but may give more accurate results.
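Both options are short to express in code. In this sketch a peak is assumed to be a `(time, frequency, strength)` tuple, and the function names, the `peaks_per_second` parameter and the example numbers are all made up for illustration.

```python
def top_n_peaks(peaks, peaks_per_second, duration_seconds):
    """Keep the strongest N peaks, where N scales with the audio length."""
    n = int(peaks_per_second * duration_seconds)
    strongest = sorted(peaks, key=lambda peak: peak[2], reverse=True)[:n]
    return sorted(strongest)  # put the survivors back in time order

def peaks_above(peaks, threshold):
    """Keep every peak whose strength is at least `threshold`."""
    return [peak for peak in peaks if peak[2] >= threshold]

# (time in seconds, frequency in Hz, strength) for a 2 second clip.
peaks = [
    (0.1, 440, 0.9),
    (0.4, 880, 0.2),
    (0.9, 330, 0.7),
    (1.3, 550, 0.4),
    (1.8, 660, 0.8),
]

print(top_n_peaks(peaks, peaks_per_second=1.5, duration_seconds=2.0))
# [(0.1, 440, 0.9), (0.9, 330, 0.7), (1.8, 660, 0.8)]
print(peaks_above(peaks, threshold=0.5))
# [(0.1, 440, 0.9), (0.9, 330, 0.7), (1.8, 660, 0.8)]
```

On this toy data both methods happen to agree. They diverge on real audio: a quiet passage might have no peaks above the threshold at all, while the top-N method always returns the same number of peaks per second of audio.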

We've almost finished constructing our fingerprint. The next step is to produce a set of hashes from our peaks.

Hashing

To motivate hashing, imagine that our fingerprint was just a collection of spectrogram peaks. Each peak's frequency would be represented by a certain number of bits, for example 10. With 10 bits of information, we can represent 2^10=1024 individual frequencies. With thousands of these points per track, we quickly get a lot of repeats (see the abracadabra implementation).

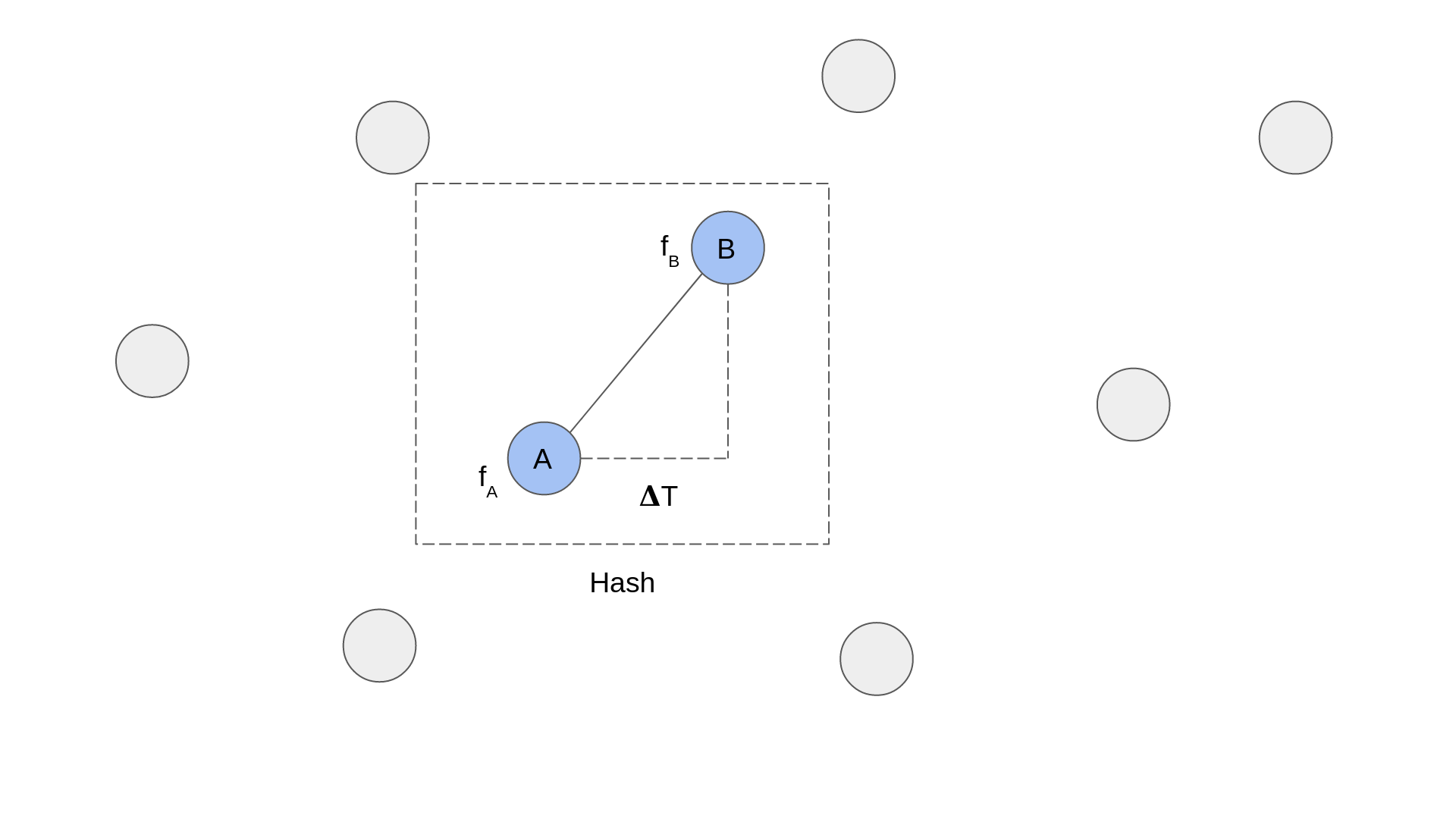

Uniqueness is important for a fingerprint, as it makes searching a lot faster and helps to recognize more songs. Shazam's solution to the problem of uniqueness is to create hashes from pairs of peaks:

The diagram above shows a zoomed-in portion of a spectrogram. Each circle represents a peak and the dashed-line box represents a hash. You can see that a hash is formed of two peaks. The information that is recorded for each hash is the frequency of each peak, fA and fB, and the time delta between them, ΔT.

The advantage of pairing points up is that two paired points are much more unique than a single point. Looking at it mathematically, if each point has 10 bits of frequency information, and the time delta between the two points can be represented by 10 bits, then you have 30 bits of information. 2^30=1073741824, which is significantly larger than the 1024 possibilities for a single point.
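To see the 30 bits of information in action, here is one possible way to pack the three values into a single integer. The bit layout is an assumption made for this example, and `f_a`, `f_b` and `dt` are assumed to already be quantised to whole numbers between 0 and 1023 (for instance frequency bin indices rather than raw hertz).

```python
BITS = 10
MASK = (1 << BITS) - 1  # 1023, the largest value that fits in 10 bits

def pack_hash(f_a, f_b, dt):
    """Pack three 10-bit values into one 30-bit integer."""
    for value in (f_a, f_b, dt):
        if not 0 <= value <= MASK:
            raise ValueError(f"{value} does not fit in {BITS} bits")
    return (f_a << (2 * BITS)) | (f_b << BITS) | dt

def unpack_hash(packed):
    """Recover (f_a, f_b, dt) from a packed 30-bit integer."""
    return (packed >> (2 * BITS)) & MASK, (packed >> BITS) & MASK, packed & MASK

print(2 ** BITS)                      # 1024 possibilities for a single point
print(pack_hash(1023, 1023, 1023) + 1)  # 1073741824 possibilities for a pair
print(unpack_hash(pack_hash(300, 512, 40)))  # (300, 512, 40)
```

Every distinct combination of the three values maps to a distinct integer, which is why a pair is so much more selective than a single frequency.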

Shazam creates pairs using the following algorithm:

- Pick a point. This will be called the anchor point.

- Calculate a target zone of the spectrogram for the anchor point.

- For every point in the target zone, create a pair with the anchor point.

You can see this algorithm illustrated in the following animation:

Choosing a target zone isn't described in the Shazam paper, but the images the paper contains show it as starting slightly ahead in time of the anchor point and being centered on the anchor point's frequency.
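Here is a sketch of the pairing algorithm above. Since the paper doesn't pin down the target zone, the zone used here is my own guess at a reasonable shape: it starts `min_dt` after the anchor, ends `max_dt` after it, and extends `freq_half_width` above and below the anchor's frequency. All three parameters and their default values are assumptions, and times and frequencies are in arbitrary units.

```python
def pair_peaks(peaks, min_dt=1, max_dt=10, freq_half_width=50):
    """Pair each anchor peak with every peak in its target zone.

    `peaks` is a list of (time, frequency) tuples sorted by time.
    Returns a list of (f_a, f_b, dt, anchor_time) tuples.
    """
    hashes = []
    for i, (t_a, f_a) in enumerate(peaks):
        for t_b, f_b in peaks[i + 1:]:
            dt = t_b - t_a
            if dt > max_dt:
                break  # peaks are time-sorted, so the rest are even further away
            if dt >= min_dt and abs(f_b - f_a) <= freq_half_width:
                hashes.append((f_a, f_b, dt, t_a))
    return hashes

peaks = [(0, 100), (2, 120), (3, 400), (5, 90), (20, 110)]
for pair in pair_peaks(peaks):
    print(pair)
# (100, 120, 2, 0)
# (100, 90, 5, 0)
# (120, 90, 3, 2)
```

The peak at frequency 400 is never paired because it is too far from every anchor in frequency, and the peak at time 20 is too far away in time. A bigger target zone produces more pairs per anchor, which makes matching more robust at the cost of a larger fingerprint.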

Once a pair has been created, it is stored as a hash in the database with the following information:

| | | | Other information | |
| --- | --- | --- | --- | --- |
| Point A freq (fA) | Point B freq (fB) | Time delta (ΔT) | Point A time | Track ID |

The first three columns (fA, fB and ΔT) make up the hash. The "Other information" is used to locate the hash at a specific time in a song. This will be used in matching later.

All of the hashes for a particular track make up the fingerprint. In the next section, you'll read about how Shazam matches these fingerprints.

Matching

Given a collection of fingerprints in a database, how does Shazam figure out which one a given audio sample matches? This is where the matching part of the system comes in.

Recall the system diagram from earlier:

The recognize and register flows both generate fingerprints. The difference lies in what they do with them. While the register flow stores fingerprints away for future matching, the recognize flow has to match its fingerprint with what is already in the database.

The matching algorithm contains the following steps:

- Retrieve all hashes from the database that match the sample's fingerprint.

- Group these hashes by song.

- For each song, figure out if the hashes line up.

- Choose the track with the most lined-up hashes.

We'll look at each of these steps in turn.

Step 1: Retrieve matching hashes

The first step is to find every hash in the database that matches a hash in the fingerprint we just created (abracadabra implementation). Even though a hash is a 3-tuple of (fA, fB, ΔT), abracadabra stores this as hash(fA, fB, ΔT), where hash() is a hash function that returns a single value.

This way you only have to search for a single value per hash instead of three.
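As a rough illustration of storing and retrieving hashes by a single value, here is an in-memory stand-in for the database. This is not abracadabra's actual storage code: the `FingerprintStore` class, the `hash_key` function and the choice of SHA-1 are all assumptions made for this sketch.

```python
import hashlib
from collections import defaultdict

def hash_key(f_a, f_b, dt):
    """Collapse (fA, fB, dT) into a single integer key."""
    digest = hashlib.sha1(f"{f_a}|{f_b}|{dt}".encode()).digest()
    return int.from_bytes(digest[:8], "big")

class FingerprintStore:
    """Toy in-memory database: key -> [(track_time, track_id), ...]."""

    def __init__(self):
        self._index = defaultdict(list)

    def register(self, track_id, hashes):
        """Store a track's hashes, given as (fA, fB, dT, time) tuples."""
        for f_a, f_b, dt, track_time in hashes:
            self._index[hash_key(f_a, f_b, dt)].append((track_time, track_id))

    def lookup(self, sample_hashes):
        """Return (sample_time, track_time, track_id) for every stored
        hash that matches one of the sample's hashes."""
        matches = []
        for f_a, f_b, dt, sample_time in sample_hashes:
            for track_time, track_id in self._index.get(hash_key(f_a, f_b, dt), []):
                matches.append((sample_time, track_time, track_id))
        return matches

store = FingerprintStore()
store.register("song-a", [(100, 120, 2, 10), (120, 90, 3, 12)])
store.register("song-b", [(100, 120, 2, 40)])

matches = store.lookup([(100, 120, 2, 0), (300, 310, 4, 1)])
print(matches)  # [(0, 10, 'song-a'), (0, 40, 'song-b')]
```

Each lookup is a single dictionary access, no matter how many tracks are registered. A real system would use an indexed database column to get the same effect on disk.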

Step 2: Group hashes by song

Recall the format of an individual hash in the database:

| | | | Other information | |
| --- | --- | --- | --- | --- |
| Point A freq (fA) | Point B freq (fB) | Time delta (ΔT) | Point A time | Track ID |

Thanks to the track ID that we associated with each hash, we can group the hashes by track. This allows us to score each potentially matching track.
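Grouping is a small step, but it is easy to show. This sketch assumes each match is a `(sample_time, track_time, track_id)` tuple; that layout and the function name `group_by_track` are my own choices for illustration.

```python
from collections import defaultdict

def group_by_track(matches):
    """Group (sample_time, track_time, track_id) matches by track ID."""
    grouped = defaultdict(list)
    for sample_time, track_time, track_id in matches:
        grouped[track_id].append((sample_time, track_time))
    return dict(grouped)

matches = [
    (3, 13, "song-a"),
    (4, 14, "song-a"),
    (3, 57, "song-b"),
    (7, 20, "song-a"),
]
print(group_by_track(matches))
# {'song-a': [(3, 13), (4, 14), (7, 20)], 'song-b': [(3, 57)]}
```

Each track now has its own list of time pairs, ready to be scored independently.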

Step 3: Figure out if hashes line up

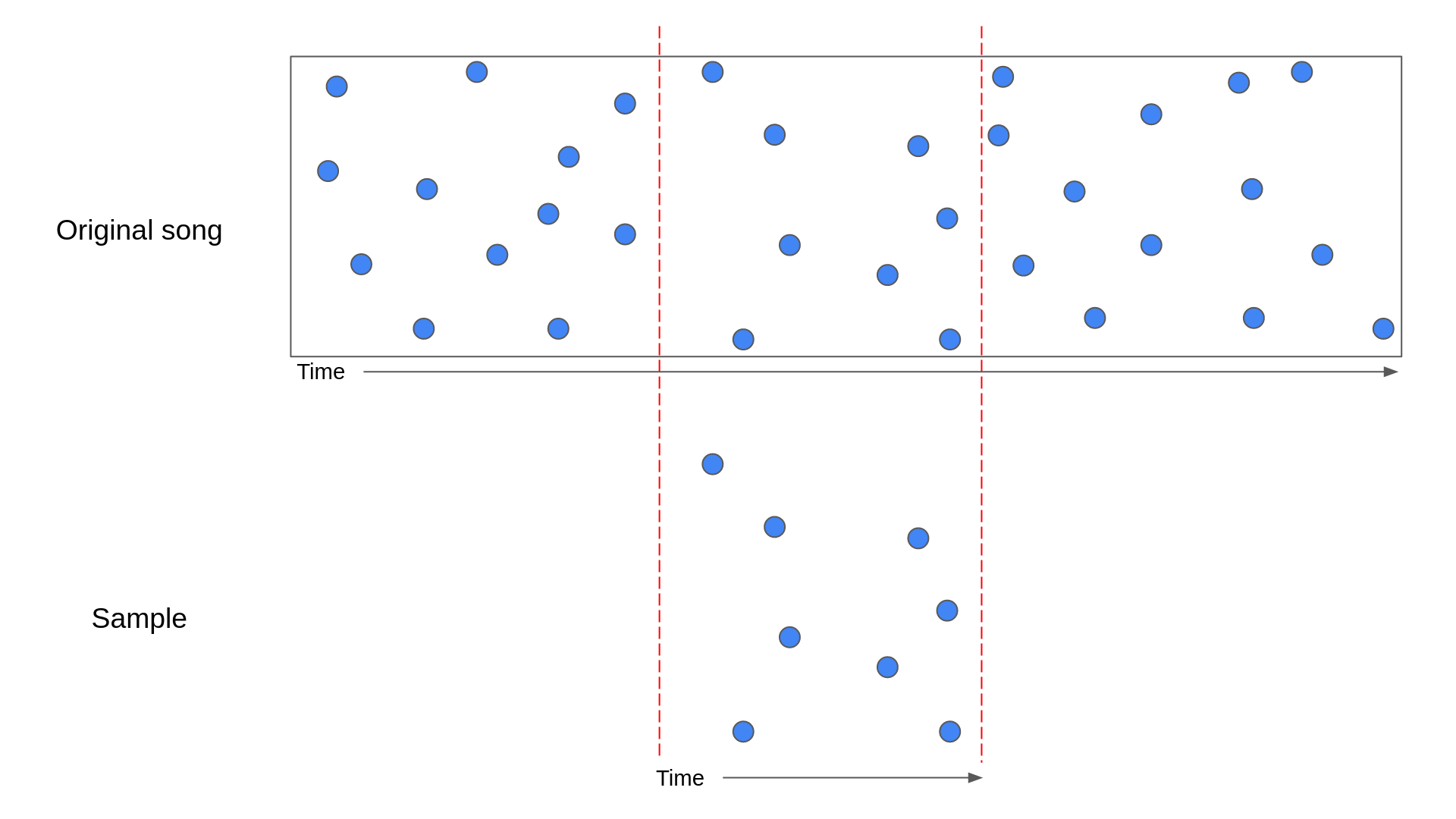

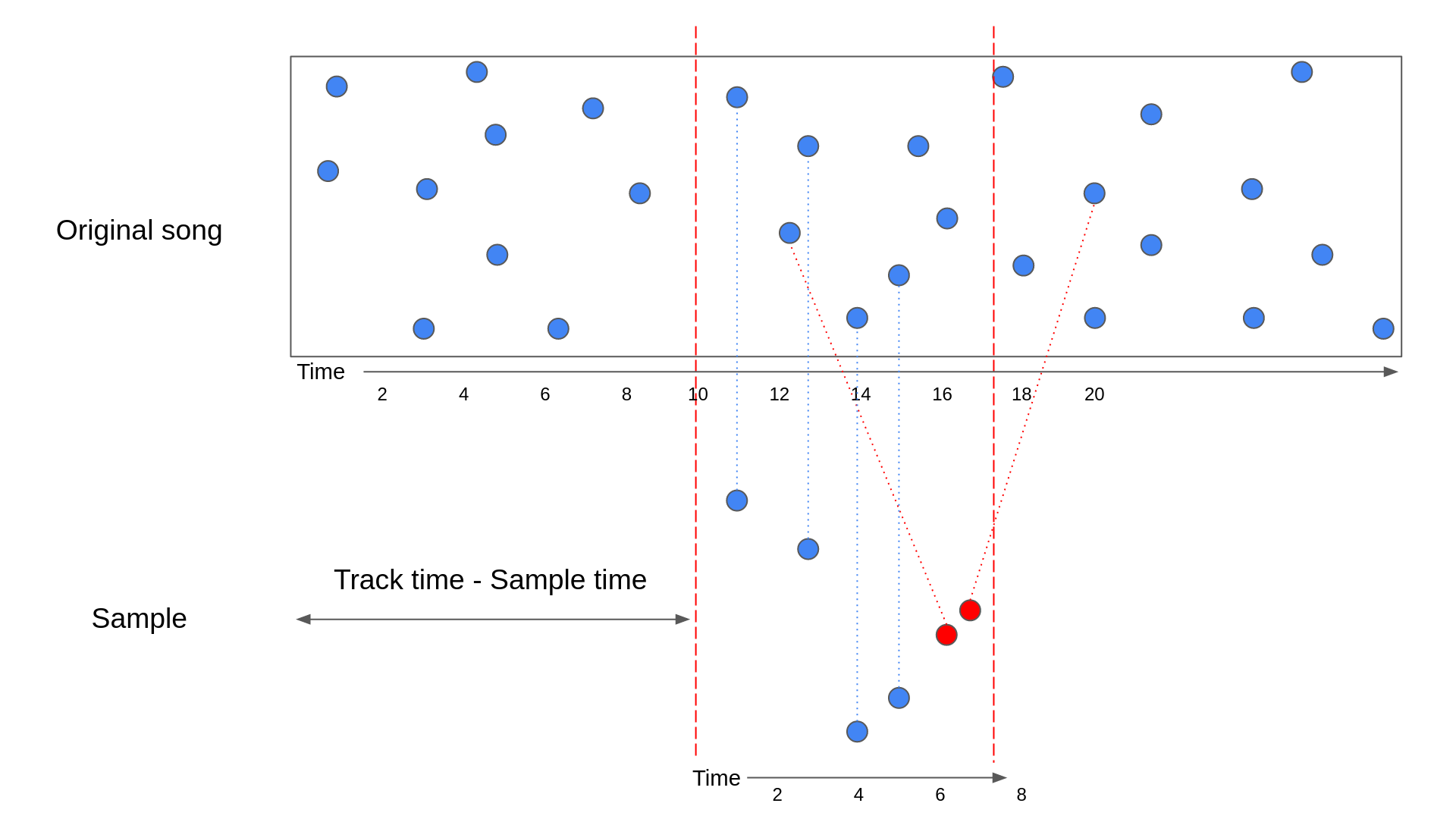

If a sample matches a song, then the hashes present in that sample should line up nicely with the hashes in some section of that song. The diagram below illustrates what this would look like:

In the above diagram, a sample has been lined up with the section of the original song that it came from. The blue points represent the anchor points of the hashes.

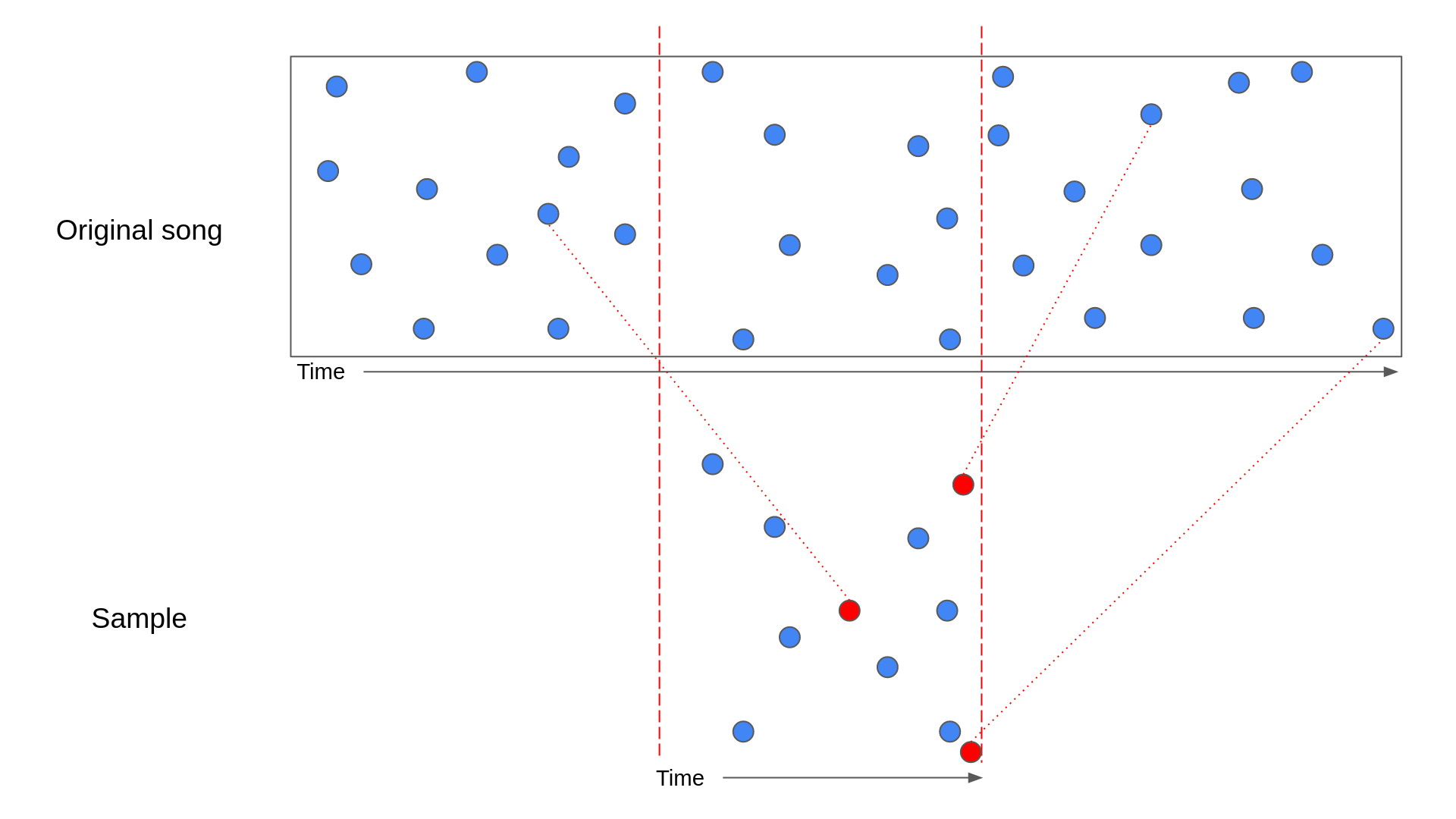

While the above diagram shows the perfect scenario, there is a chance that the matching hashes from the database don't line up perfectly. For example, noise could have introduced peaks in the sample that resemble peaks at a different point in the song. This can lead to the following scenario:

In the above diagram, the pink circles represent hashes that match to points in the song outside the section the sample came from. In this situation, it's harder to see that the sample is a perfect match for the song.

What's worse, sometimes hashes can match to the wrong song! This is where checking that the hashes line up comes in.

To explain how we can check whether the hashes line up in code, let's look at an example. Let's imagine that we've got a list of matching hashes from the database and grouped them by track. For a given track, we can then check the time that the hash occurs in the original track against the time that the hash occurs in the sample.

| Sample time | Track time | Track time - Sample time |
| --- | --- | --- |
| 3 | 13 | 10 |
| 4 | 14 | 10 |
| 7 | 20 | 13 |
| 5 | 15 | 10 |
| 6 | 12 | 6 |
| 1 | 11 | 10 |

In the above table, the matches with a Track time - Sample time of 10 are the true matches, while the other two rows are false matches. To see that this is the case, let's look at a similar diagram to the ones we saw before:

The above diagram contains the same hashes from the previous table. As you can see, the true matches have a Track time - Sample time that is equal to how far into the track the sample starts.

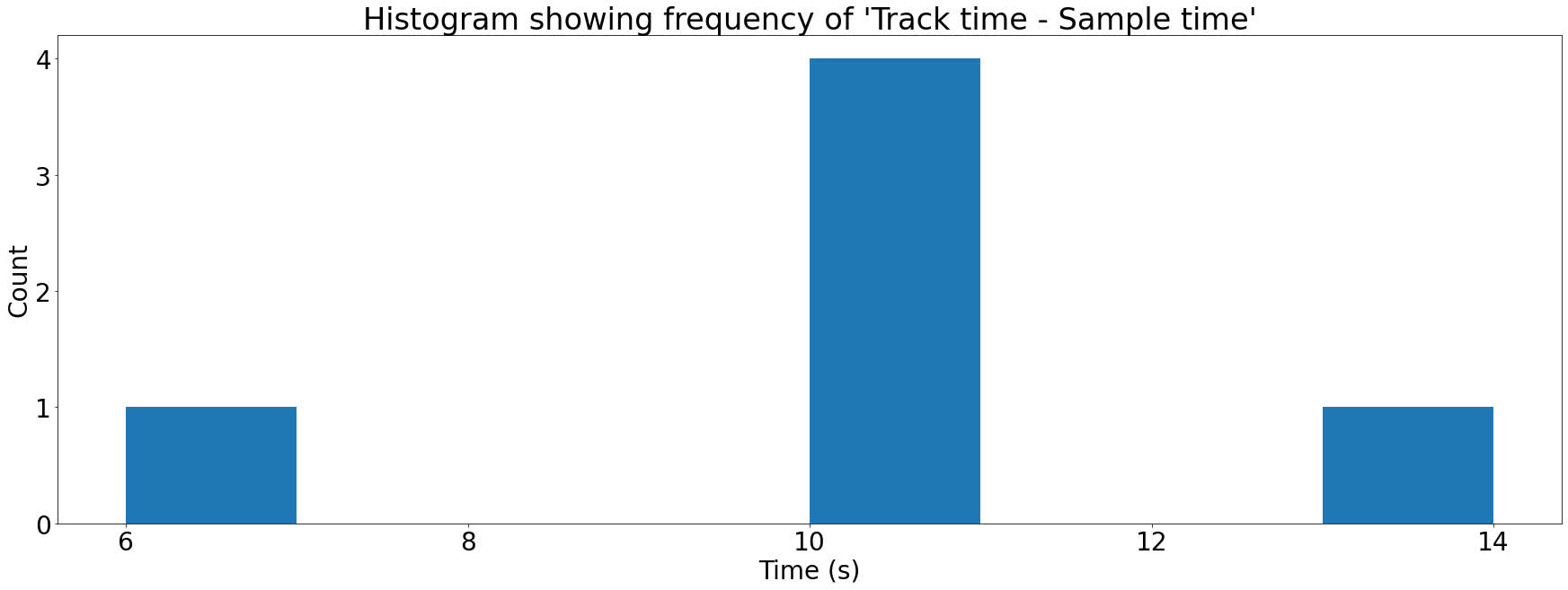

To see how we turn this into a score for the track, let's make this data into a histogram. A histogram is a fancy name for a bar chart. We're going to plot each Track time - Sample time against the number of times it occurs:

Each bar in the histogram above is referred to as a bin. To score a song on how good a match it is for an audio sample, we just have to take the largest bin. Songs that aren't good matches will have low values in all bins, whereas a song that's a good match will have a large spike in one of the bins.

This way we can compare a sample to all the songs with matching hashes in our database and score each of them. The song with the highest score is likely to be the correct result.
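The histogram scoring can be sketched with Python's `collections.Counter`, using the numbers from the table above. The function names and the made-up second song are for illustration only. Real timestamps would be fractions of a second, so you would round or bin the offsets before counting them.

```python
from collections import Counter

def score_track(pairs):
    """Score a track from its (sample_time, track_time) pairs.

    Returns (best_offset, count): the most common value of
    track time - sample time and how many hashes share it.
    """
    if not pairs:
        return None, 0
    offsets = Counter(track_time - sample_time
                      for sample_time, track_time in pairs)
    return offsets.most_common(1)[0]

def best_match(grouped):
    """Return the track ID with the largest histogram bin, plus all scores."""
    scores = {track_id: score_track(pairs)[1]
              for track_id, pairs in grouped.items()}
    return max(scores, key=scores.get), scores

# The rows from the table above, plus a second song with scattered matches.
grouped = {
    "song-a": [(3, 13), (4, 14), (7, 20), (5, 15), (6, 12), (1, 11)],
    "song-b": [(1, 50), (2, 70)],
}

print(score_track(grouped["song-a"]))  # (10, 4)
print(best_match(grouped))             # ('song-a', {'song-a': 4, 'song-b': 1})
```

The four true matches all land in the bin for an offset of 10, while the false matches are scattered across other bins and barely contribute to the score.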

You might wonder why we don't just go for the song that matches the largest number of hashes, as it would be much simpler to implement. The problem with this approach is that not all songs are the same length. Longer songs are likely to get more matches than shorter songs, and when some Spotify tracks are over 4 hours long this can really bias your results.

Conclusion

Well done for making it this far, that was a long journey! Over the course of this article, you've learned how Shazam extracts fingerprints from audio, and how it matches these fingerprints to those that it has already registered in its database.

To summarize, Shazam does the following to register a song:

- Calculates a spectrogram of a song

- Extracts peaks from that spectrogram

- Pairs those peaks up into hashes

- Stores the collection of hashes for a song as a fingerprint

Shazam does the following to recognize an audio sample:

- Calculates a fingerprint of the audio sample

- Finds the hashes that match that fingerprint in the database

- For each potential song match:

  - Calculates Track time - Sample time for each matching hash

  - Groups those values into a histogram

  - Takes the largest bin in this histogram as the score for the song

- Returns the song with the highest score

Enter abracadabra

I learned everything written here over the process of writing abracadabra, my implementation of this paper. If you are interested in seeing what this might look like in code, please take a look!

Everything is open source and I've done my best to document the project. abracadabra can also be used as a library in other projects, so please feel free to remix and build something cool. If you do use it, I'd love to hear about it.

Further reading

If you want to find out more about anything mentioned in this article, take a look below. I've also scattered some helpful links throughout the page.