Server shipments for 2023 are down, but revenue is rising

The big picture: The server business is expected to reach new revenue heights in the next few years, and this growth is not solely attributable to AI workload acceleration. According to market research company Omdia, powerful GPU co-processors are spearheading the shift toward a fully heterogeneous computing model.

Omdia anticipates a decline of up to 20 percent in yearly shipments of server units by the end of 2023, even though revenues are expected to grow by six to eight percent. The company’s recent Cloud and Data Center Market Update report illustrates a reshaping of the data center market driven by the demand for AI servers. This, in turn, is fostering a broader transition to the hyper-heterogeneous computing model.

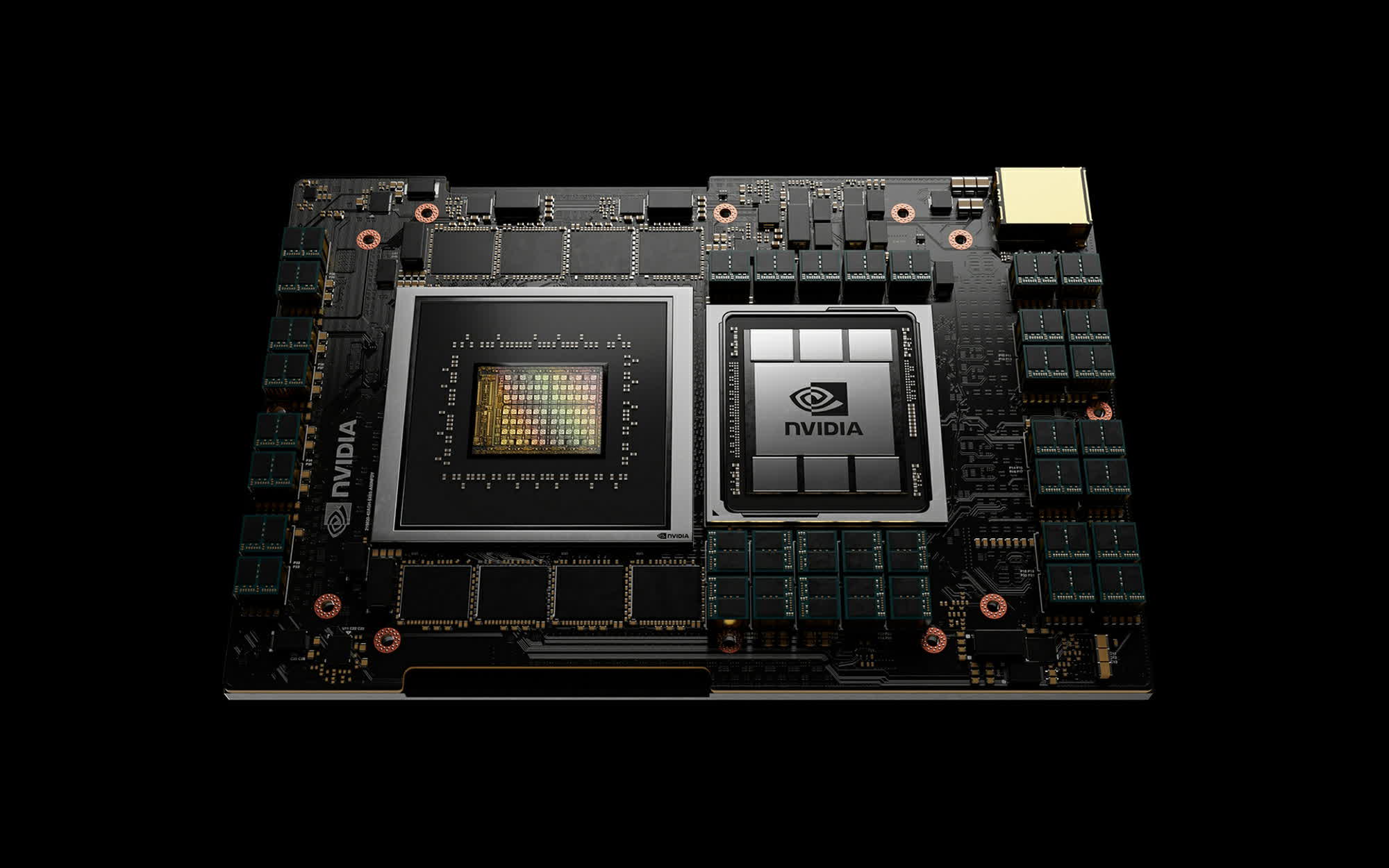

Omdia has coined the term “hyper-heterogeneous computing” to describe a server configuration equipped with co-processors specifically designed to optimize various workloads, whether for AI model training or other specialized applications. According to Omdia, Nvidia’s DGX model, featuring eight H100 or A100 GPUs, has emerged as the most popular AI server to date and is particularly effective for training chatbot models.

In addition to Nvidia’s offerings, Omdia highlights Amazon’s Inferentia 2 models as popular AI accelerators. These servers are equipped with custom-built co-processors designed for accelerating AI inferencing workloads. Other co-processors contributing to the hyper-heterogeneous computing trend include Google’s Video Coding Units (VCUs) for video transcoding and Meta’s video processing servers, which leverage the company’s Scalable Video Processors.

In this new hyper-heterogeneous computing scenario, manufacturers are increasing the number of costly silicon components installed in their server models. According to Omdia’s forecast, CPUs and specialized co-processors will constitute 30 percent of data center spending by 2027, up from less than 20 percent in the previous decade.

Currently, media processing and AI take the spotlight in most hyper-heterogeneous servers. However, Omdia anticipates that other ancillary workloads, such as databases and web servers, will have their own co-processors in the future. Solid-state drives with computational storage components can be viewed as an early form of in-hardware acceleration for I/O workloads.

Based on Omdia’s data, Microsoft and Meta currently lead among hyperscalers in the deployment of server GPUs for AI acceleration. Both companies are expected to receive 150,000 Nvidia H100 GPUs by the end of 2023, a quantity three times larger than what Google, Amazon, or Oracle are deploying.

The demand for AI acceleration servers from cloud companies is so high that original equipment manufacturers like Dell, Lenovo, and HPE are facing delays of 36 to 52 weeks in obtaining sufficient H100 GPUs from Nvidia to fulfill their clients’ orders. Omdia notes that powerful data centers equipped with next-gen co-processors are also driving increased demand for power and cooling infrastructure.
