Amazon’s AWS GenAI strategy comes with a big Q


Amazon Web Services unveiled a comprehensive three-layer GenAI strategy and set of offerings at re:Invent 2023, with the intriguing new Q digital assistant at the top of the stack. And while Q got most of the attention, there were many interconnected elements beneath it.

At each of the three layers (Infrastructure, Platform/Tools and Applications), AWS debuted a combination of new offerings and enhancements to existing products that tie together to form a complete solution in the red-hot field of GenAI. Or, at least, that’s what they were supposed to do. However, the volume of announcements in a field that is not widely understood led to considerable confusion about what exactly the company had assembled. A quick skim of the news from re:Invent reveals divergent coverage, indicating that AWS still needs to clarify its offerings.

Given the luxury of a day or two to think about it, as well as the opportunity to ask a lot of questions, it’s apparent to me now that Amazon’s new approach to GenAI is a comprehensive and compelling strategy, even in its admittedly early days. It is also evident that AWS’ efforts over the past few years have involved introducing a range of products and services that at first glance might not have seemed related, but that were the building blocks for a larger strategy that is now beginning to emerge.

The company’s latest efforts start at its core infrastructure layer. At this year’s re:Invent, AWS debuted the second-generation Trainium AI accelerator chip, which offers a 4x improvement in AI model training workloads over its predecessor. The company also discussed its Inferentia 2 chip, which is optimized for AI inferencing. Together, these two chips, along with the fourth-gen Graviton CPU, give Amazon a complete line of unique processors that it can use to build differentiated compute offerings.

AWS CEO Adam Selipsky also had Nvidia CEO Jensen Huang join him onstage to announce further partnerships between the companies. They discussed the debut of Nvidia’s latest GH200 GPU in several new EC2 compute instances from AWS, and the first third-party deployment of Nvidia’s DGX Cloud systems. In fact, the two even discussed a new version of Nvidia’s NVLink chip interconnect technology that allows up to 32 of these systems to function together as a giant AI computing factory (codenamed Project Ceiba) that AWS will host for Nvidia’s own AI development purposes.

Moving on to Platform and Tools, AWS announced significant enhancements to its Bedrock platform. Bedrock consists of a set of services that let you do everything from picking your foundation model of choice and deciding how to train or fine-tune it, to determining the levels of access that different people in an organization have, choosing what types of information are allowed and what is blocked (Bedrock Guardrails), and creating actions based on what the model generates.

In the area of model tuning, AWS announced support for fine-tuning, continued pre-training and, most critically, RAG (Retrieval Augmented Generation). All three of these have burst onto the scene relatively recently and are being actively explored by organizations to integrate their own custom data into GenAI applications. These new approaches are important because many companies have started to realize they are not interested in (or, frankly, capable of) building their own foundation models from scratch.

On the foundation model side of things, the range of new options supported within Bedrock includes Meta’s Llama 2, Stable Diffusion, and more versions of Amazon’s own family of Titan models. Given AWS’ recent investment in Anthropic, it wasn’t a surprise to see a particular focus on Anthropic’s new Claude 2.1 model as well.

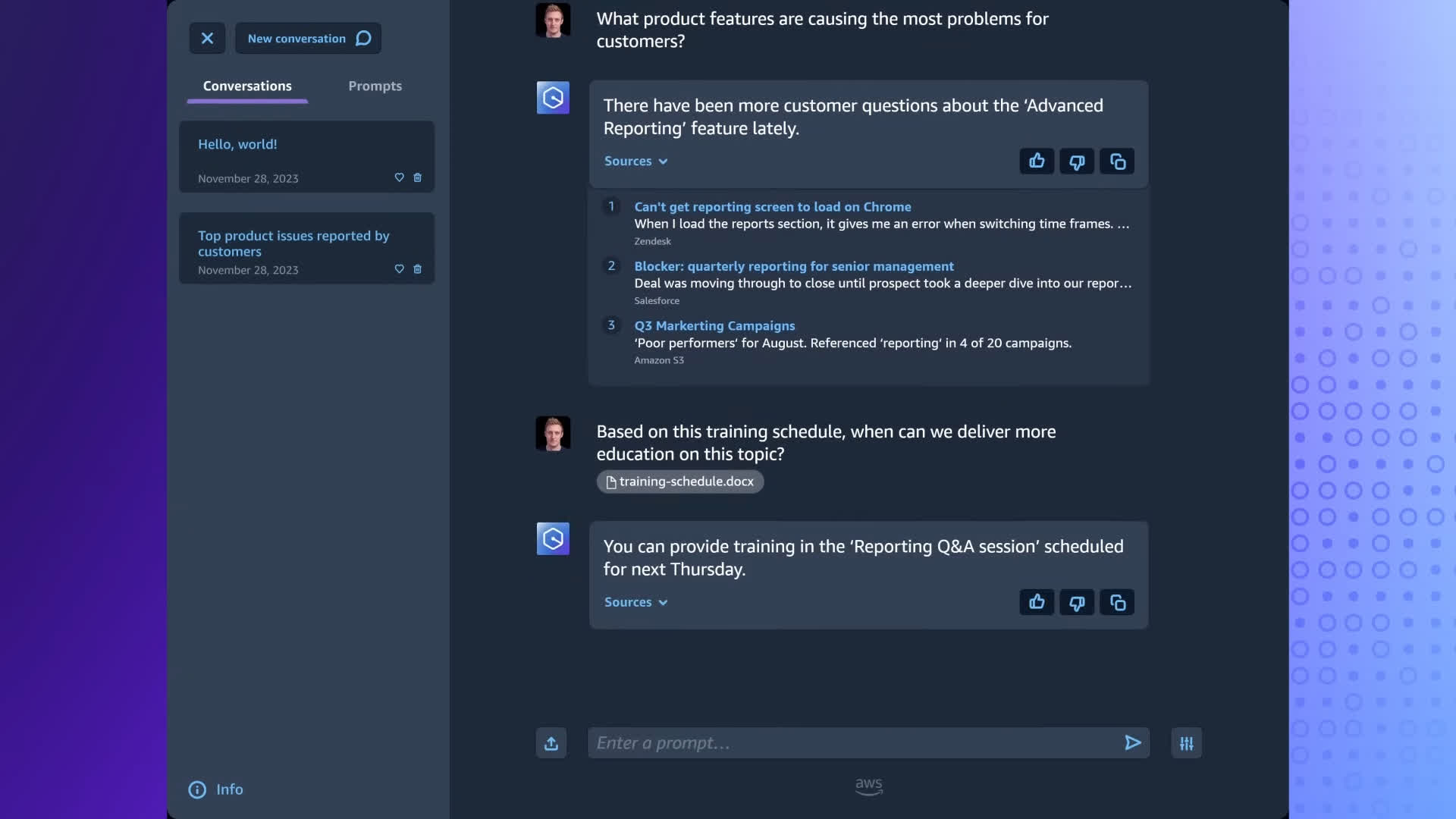

The final layer of the AWS GenAI story is the Q digital assistant. Unlike most of AWS’ offerings, Q can be used as a high-level, finished GenAI application that companies can start to deploy. Developers can customize Q for specific applications via APIs and other tools in the Bedrock layer.

What’s interesting about Q is that it can take many forms. The most obvious version is a chatbot-style experience similar to what other companies currently offer. Not surprisingly, most of the early news stories focused on this chatbot UI.

But even in this early iteration, Q can offer a variety of functions. For example, AWS showed how Q could enhance the code-generating experience in Amazon’s CodeWhisperer, act as a call transcriber and summarizer for the Amazon Connect customer service platform, simplify the creation of data dashboards in Amazon QuickSight analytics, and serve as a content generator and knowledge management guide for business users. Q can use different underlying foundation models for various applications, which makes it a more extensive and capable type of digital assistant application than those offered by some competitors, but also one that is a lot harder for people to get their heads around.

Digging deeper into how Q works and its connections to the other parts of AWS, it turns out that Q was built via a set of Bedrock Agents. What this means is that companies looking for more of an “easy button” solution for getting GenAI applications deployed can use Q as is.

Companies that are interested in more customized solutions, on the other hand, can create some of their own Bedrock Agents. This concept of pre-built versus customizable capabilities also applies to Bedrock and Amazon’s SageMaker tool for building custom AI models. Bedrock is for those who want to leverage a range of already built foundation models, while SageMaker is for those who want to build models of their own.

Taking a step back, you can begin to appreciate the comprehensive framework and vision AWS has assembled. However, it’s also clear that this strategy is not the most intuitive to understand. Looking ahead, it’s crucial that Amazon refines its messaging to make its GenAI narrative more accessible and understandable to a broader audience. This will enable more companies to leverage the full range of capabilities that are currently obscured within the framework.

Bob O’Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on X @bobodtech

