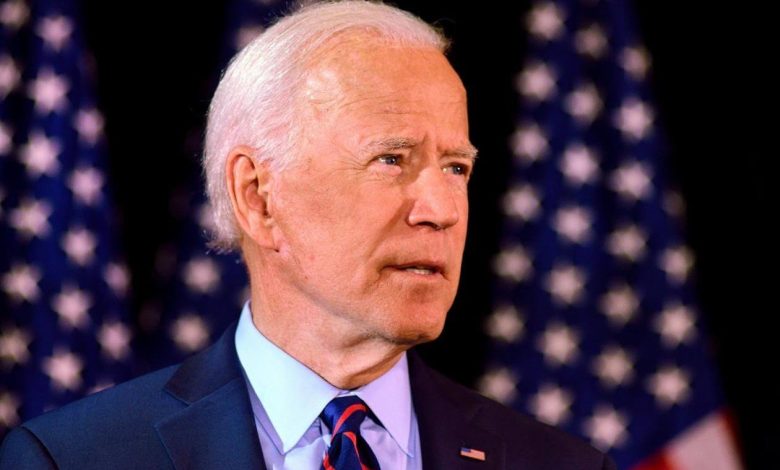

Advocacy groups urge Biden to address generative AI’s environmental impact and misinformation risks


In brief: Several advocacy groups have written an open letter to President Biden and his administration, highlighting their concerns over the lack of action on the massive amount of energy used by generative AI tools and on the tools’ ability to create environment-related misinformation.

Back in October, the Biden administration tried to address the difficult issue of regulating artificial intelligence development with an executive order, signed by the president, that promised to manage the technology’s risks. The order covers many areas, including AI’s impact on jobs, privacy, security, and more. It also mentions using AI to improve the national grid and to review environmental studies to help combat climate change.

Seventeen groups, including Greenpeace USA, the Tech Oversight Project, Friends of the Earth, Amazon Employees for Climate Justice, and the Electronic Privacy Information Center (EPIC), wrote in an open letter to Biden that the executive order does not do enough to address the technology’s environmental impact, both literally and through misinformation.

“… due to their enormous energy requirements and the carbon footprint associated with their growth and proliferation, the widespread use of LLMs can increase carbon emissions,” the letter states.

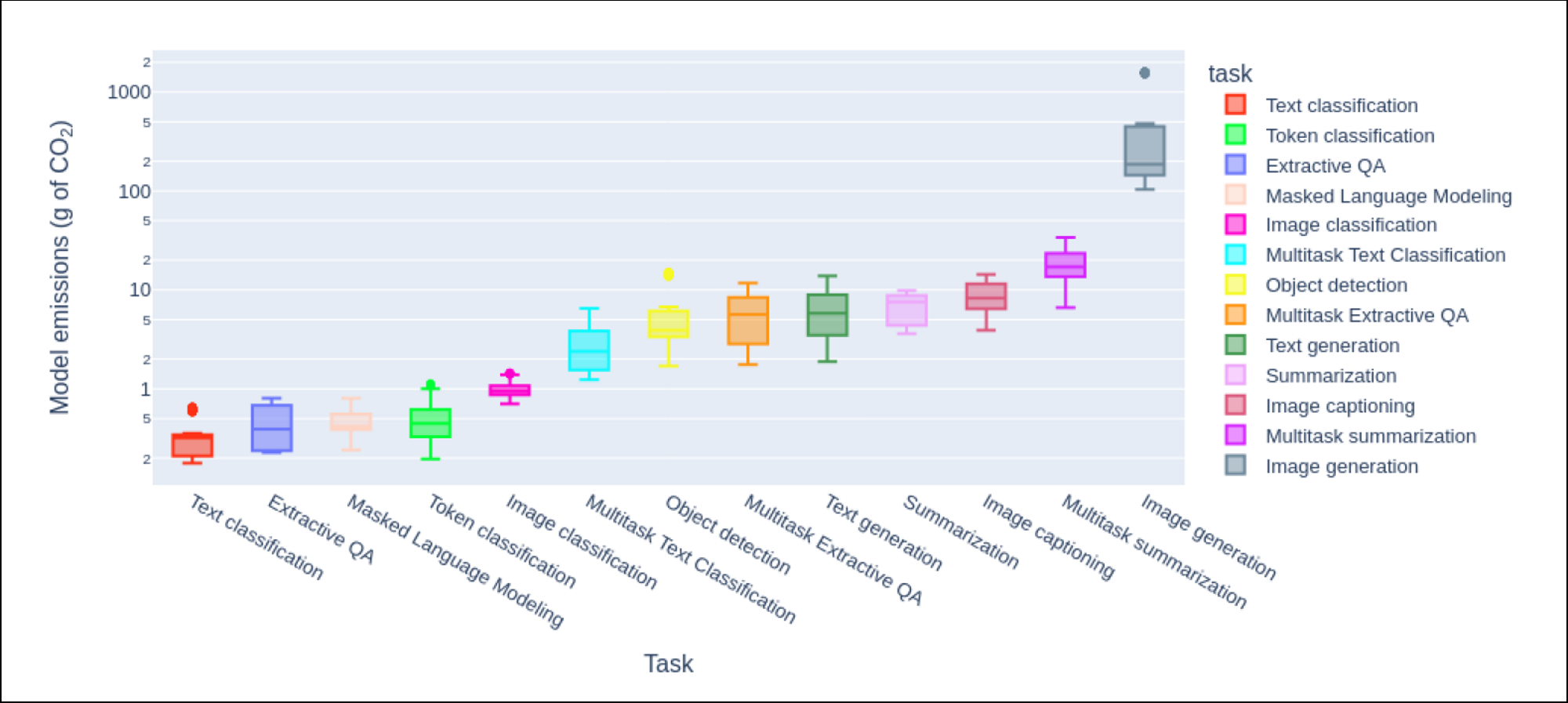

A recently published study from AI startup Hugging Face and Carnegie Mellon University highlighted the massive amount of energy used by generative AI models, both during their training phase and in their frequent daily applications. Using the likes of Stable Diffusion XL to generate 1,000 images causes the same amount of carbon emissions as driving an average gas-powered car 4.1 miles.

“Unfounded hype from Silicon Valley says that AI can save the planet sometime in the future but research shows the opposite is actually happening right now,” the groups wrote.

In addition to concerns about AI’s impact on the planet, the groups also expressed concerns about its potential use to spread climate disinformation.

“Generative AI threatens to amplify the types of climate disinformation that have plagued the social media era and slowed efforts to fight climate change,” they wrote. “Researchers have been able to easily bypass ChatGPT’s safeguards to produce an article from the perspective of a climate change denier that argued global temperatures are actually decreasing.”

We’ve already seen generative AI being used to create disinformation about the upcoming US election and in several other domains, where the primary challenge often lies in convincing people that the generated content is not real.

The groups want the government to require companies to publicly report on the energy use and emissions produced across the full life cycle of AI models, including training, updating, and search queries. They also request a study into the effect AI systems can have on climate disinformation and the implementation of safeguards against its production. Finally, they ask that companies explain to the general public how generative AI models produce information, how they measure accuracy on climate change, and what sources of evidence support the claims they make.

