OpenAI says mysterious chat histories resulted from account takeover


OpenAI officials say the ChatGPT histories a user reported resulted from his ChatGPT account being compromised. The unauthorized logins came from Sri Lanka, an OpenAI representative said. The user said he logs into his account from Brooklyn, New York.

“From what we discovered, we consider it an account take over in that it’s consistent with activity we see where someone is contributing to a ‘pool’ of identities that an external community or proxy server uses to distribute free access,” the representative wrote. “The investigation observed that conversations were created recently from Sri Lanka. These conversations are in the same time frame as successful logins from Sri Lanka.”

The user, Chase Whiteside, has since changed his password, but he doubted his account was compromised. He said he used a nine-character password with upper- and lower-case letters and special characters. He said he didn’t use it anywhere other than for a Microsoft account. He said the chat histories belonging to other people appeared all at once on Monday morning during a brief break from using his account.

OpenAI’s explanation likely means the original suspicion that ChatGPT was leaking chat histories to unrelated users is wrong. It does, however, underscore that the site provides no mechanism for users such as Whiteside to protect their accounts using 2FA or to track details such as the IP location of current and recent logins. These protections have been standard on most major platforms for years.

Original story: ChatGPT is leaking private conversations that include login credentials and other personal details of unrelated users, screenshots submitted by an Ars reader on Monday indicated.

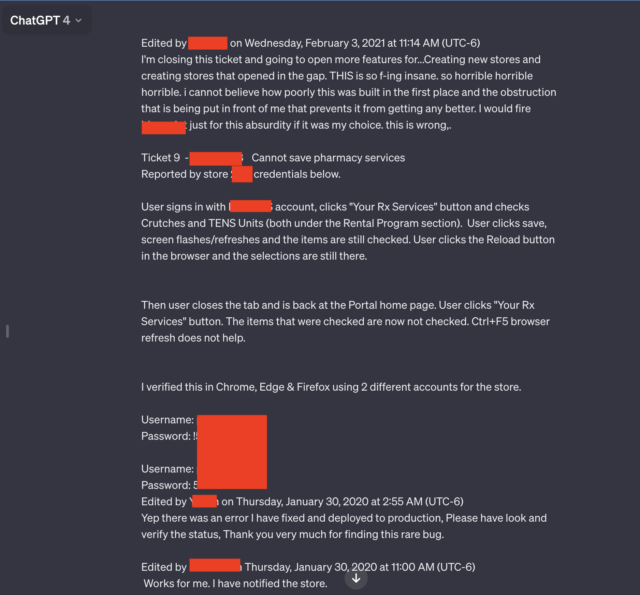

Two of the seven screenshots the reader submitted stood out in particular. Both contained multiple pairs of usernames and passwords that appeared to be connected to a support system used by employees of a pharmacy prescription drug portal. An employee using the AI chatbot seemed to be troubleshooting problems they encountered while using the portal.

“Horrible, horrible, horrible”

“THIS is so f-ing insane, horrible, horrible, horrible, i cannot believe how poorly this was built in the first place, and the obstruction that is being put in front of me that prevents it from getting better,” the user wrote. “I would fire [redacted name of software] just for this absurdity if it was my choice. This is wrong.”

Besides the candid language and the credentials, the leaked conversation includes the name of the app the employee was troubleshooting and the store number where the problem occurred.

The entire conversation goes well beyond what’s shown in the redacted screenshot above. A link Ars reader Chase Whiteside included showed the chat conversation in its entirety. The URL disclosed additional credential pairs.

The results appeared Monday morning shortly after Whiteside had used ChatGPT for an unrelated query.

“I went to make a query (in this case, help coming up with clever names for colors in a palette) and when I returned to access moments later, I noticed the additional conversations,” Whiteside wrote in an email. “They weren’t there when I used ChatGPT just last night (I’m a pretty heavy user). No queries were made; they just appeared in my history, and most certainly aren’t from me (and I don’t think they’re from the same user either).”

Other conversations leaked to Whiteside include the name of a presentation someone was working on, details of an unpublished research proposal, and a script written in the PHP programming language. The users behind each leaked conversation appeared to be different and unrelated to one another. The conversation involving the prescription portal included the year 2020. Dates didn’t appear in the other conversations.

The episode, and others like it, underscores the wisdom of stripping personal details out of queries made to ChatGPT and other AI services whenever possible. Last March, ChatGPT maker OpenAI took the AI chatbot offline after a bug caused the site to show titles from one active user’s chat history to unrelated users.

In November, researchers published a paper reporting how they used queries to prompt ChatGPT into divulging email addresses, phone and fax numbers, physical addresses, and other private data that was included in the material used to train the ChatGPT large language model.

Concerned about the possibility of proprietary or private data leaking, companies including Apple have restricted their employees’ use of ChatGPT and similar sites.

As noted in an article from December, when multiple people found that Ubiquiti’s UniFi devices broadcast private video belonging to unrelated users, these sorts of experiences are as old as the Internet itself. As explained in that article:

The precise root causes of this type of system error vary from incident to incident, but they often involve “middlebox” devices, which sit between the front- and back-end devices. To improve performance, middleboxes cache certain data, including the credentials of users who have recently logged in. When mismatches occur, credentials for one account can be mapped to a different account.
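The caching failure described in that passage can be illustrated with a minimal, hypothetical Python sketch. It does not reflect how OpenAI’s or Ubiquiti’s systems actually work, and OpenAI attributed this particular incident to account takeover rather than caching. The names `CachingMiddlebox`, `key_by_connection`, and `key_by_connection_and_user` are invented for illustration. The assumption is a middlebox that remembers which account a request was authenticated as, keyed on something two users can share, such as a pooled connection.

```python
from typing import Callable

# Hypothetical cache-key function type: (connection_id, user) -> cache key.
KeyFn = Callable[[str, str], str]


def key_by_connection(connection_id: str, user: str) -> str:
    # Flawed (hypothetical): two users on the same pooled connection share one key.
    return connection_id


def key_by_connection_and_user(connection_id: str, user: str) -> str:
    # Safer (hypothetical): the key includes the identity the request claims.
    return f"{connection_id}|{user}"


class CachingMiddlebox:
    """Hypothetical middlebox that caches which account a request maps to."""

    def __init__(self, histories: dict[str, list[str]], key_fn: KeyFn) -> None:
        self.histories = histories  # back-end data, keyed by account name
        self.key_fn = key_fn
        self.auth_cache: dict[str, str] = {}  # cache key -> cached account

    def handle(self, connection_id: str, user: str) -> list[str]:
        key = self.key_fn(connection_id, user)
        # On a cache miss, trust the request's own user (real authentication is
        # elided in this sketch); on a hit, reuse whatever account was cached.
        account = self.auth_cache.setdefault(key, user)
        return self.histories.get(account, [])


histories = {"alice": ["alice's chat"], "bob": ["bob's chat"]}

leaky = CachingMiddlebox(histories, key_by_connection)
leaky.handle("conn-1", "alice")  # ["alice's chat"]
leaky.handle("conn-1", "bob")    # ["alice's chat"]: bob is served alice's history

safe = CachingMiddlebox(histories, key_by_connection_and_user)
safe.handle("conn-1", "bob")     # ["bob's chat"]
```

In this sketch, the mismatch comes entirely from the cache key: once cached state is keyed on the full identity of the request (or not cached at all), a cache hit can no longer resolve to someone else’s account.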

This story was updated on January 30 at 3:21 pm ET to reflect OpenAI’s investigation into the incident.