Generative AI for creating animations

Apple published research last week that details MGIE, a generative AI photo editor that lets you make changes to an image using text prompts. That’s the kind of feature I’d expect to see in iOS 18, alongside more conventional generative AI photo editing tools, like interacting with an image using your hands. You can already test Apple MGIE without installing anything on your computer.

If that wasn’t enough to prove that Apple is serious about adding generative AI to its operating systems, more evidence has just been uncovered. Apple released a new study that details a different piece of generative AI research.

Apple Keyframer is a generative AI tool that lets you edit images via prompts. But, unlike MGIE, Keyframer lets you create animations out of a still image using text commands.

Keyframer sounds more complex than MGIE. And, like MGIE, there’s no guarantee you’ll see Keyframer on the iPhone once iOS 18 is released. But I already have an idea about what Keyframer could be used for: animated stickers in iMessage.

You can create your own stickers from any image. Just tap and hold on a photo to grab the part you want to turn into a sticker. But what if you could tell Apple AI to create animations from an image, and then save the result as an animated sticker? I’m only speculating here, of course. And this would be the simplest use of Keyframer I can think of as I’m writing these lines of text.

The study

Apple’s study, titled “Keyframer: Empowering Animation Design using Large Language Models,” presents more advanced use cases for such generative AI powers.

Apple has actually tested Keyframer with professional animation designers and engineers. The company sees the AI product as a potentially useful tool that professionals could use while working on new animations.

Rather than spending hours iterating on a concept, they could just create it with the help of a static image and text prompts. They could refine the prompts to get a result closer to what they want to achieve. After using AI to prototype ideas, they could then get to work. Or they could just use the animations that Keyframer puts out.

That’s to say that Apple might want to add Keyframer to specific photo and video editing software in the future, extending beyond the iPhone or iOS 18.

How Apple Keyframer works

Keyframer works fairly simply, where by simply I mean it’s using a large language model like the one behind ChatGPT. Apple actually relied on OpenAI’s GPT-4 for Keyframer rather than a proprietary Apple solution.

Rumors say that Apple is working on its own language models, and I’d expect a commercial version of Keyframer to use those rather than anything from the competition.

Back to Keyframer: the user simply had to upload an SVG image and type a text prompt. This suggests that the large language model has to support multimodal input, which would explain why Apple went to GPT-4 to test the tool. Also, the fact that Apple tested Keyframer with non-Apple testers explains why Apple chose a publicly available AI product.

Once that’s done, the AI generates the code that makes the animation happen. Users can edit the actual code or provide more prompts to edit the result.
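To make that flow a bit more concrete, here’s a minimal Python sketch of the loop. It isn’t Apple’s code: the function names (`build_prompt`, `fake_llm`, `embed_css`) are made up for illustration, the model call is replaced with a canned reply, and I’m assuming the generated code is CSS that targets element ids in the SVG. Because SVG is an XML text format, the sketch simply pastes the markup into the prompt.

```python
import re


def build_prompt(svg_markup: str, instruction: str) -> str:
    """Combine the SVG source and the user's request into one text prompt."""
    return (
        "Here is an SVG image:\n"
        f"{svg_markup}\n\n"
        f"Request: {instruction}\n"
        "Reply with CSS only, targeting the element ids in the SVG."
    )


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns a canned reply."""
    return (
        "@keyframes pulse { from { opacity: 1; } to { opacity: 0.3; } }\n"
        "#sun { animation: pulse 2s ease-in-out infinite alternate; }"
    )


def embed_css(svg_markup: str, css: str) -> str:
    """Insert the CSS as a <style> element right after the opening <svg> tag."""
    match = re.search(r"<svg\b[^>]*>", svg_markup)
    if match is None:
        raise ValueError("input does not contain an <svg> element")
    end = match.end()
    return svg_markup[:end] + f"<style>{css}</style>" + svg_markup[end:]


# Illustrative input: a single circle with an id the CSS can target.
svg = (
    '<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 100 100">'
    '<circle id="sun" cx="50" cy="50" r="20" fill="gold"/>'
    "</svg>"
)

prompt = build_prompt(svg, "make the sun pulse gently")
animated = embed_css(svg, fake_llm(prompt))
print(animated)
```

In a setup like this, refining the result would mean either editing the CSS string by hand or calling the model again with a follow-up request, which mirrors the two options the study describes.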

Unfortunately, the study doesn’t offer animation examples, but it includes images that attempt to detail the process of using LLMs to generate and improve animations via Keyframer. One such example is shown below.

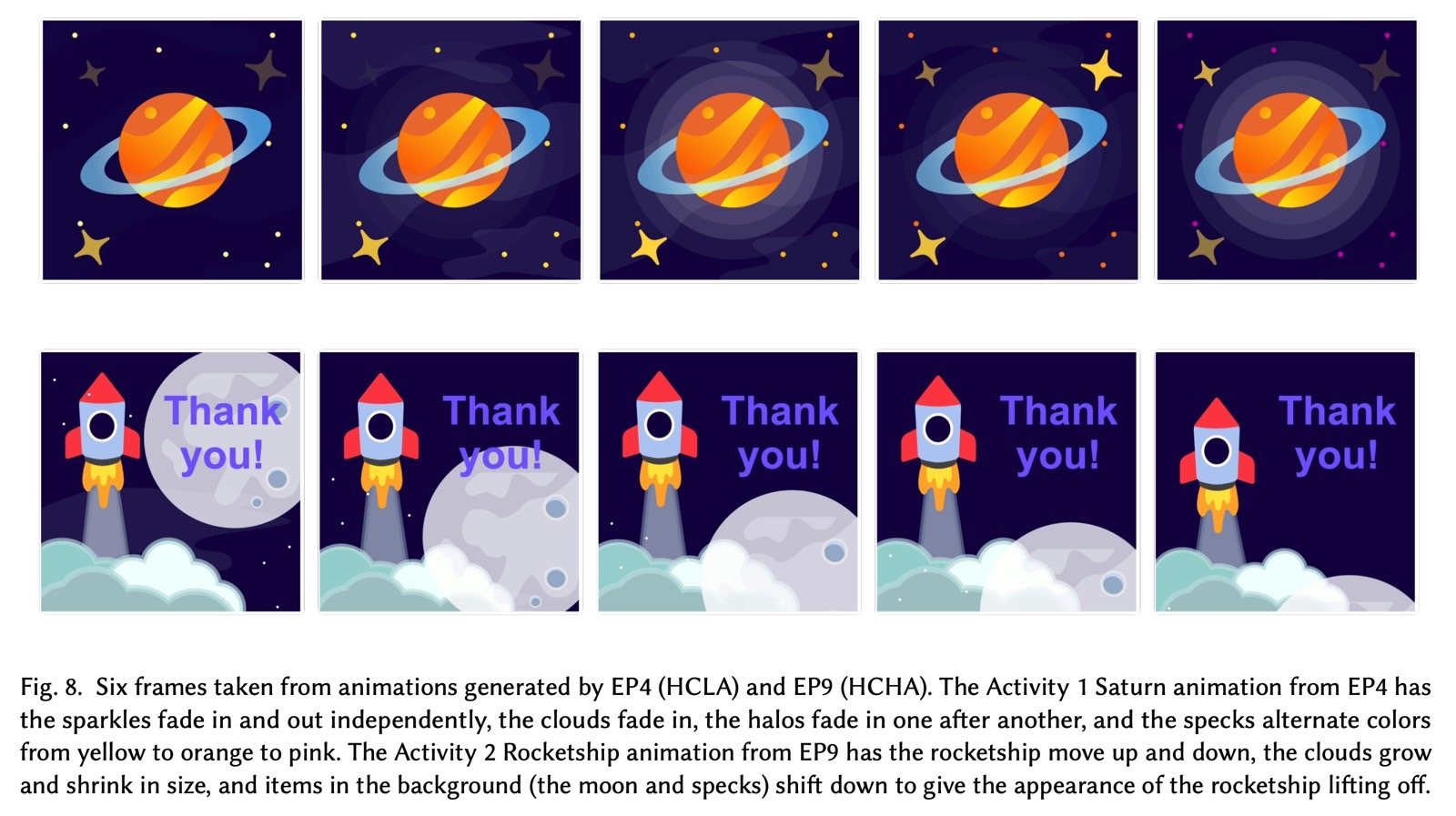

Another example follows below, containing descriptions of Keyframer animations from Apple’s test subjects. Again, actual animations would have been much better, but this is a published study, which can’t feature any animated content.

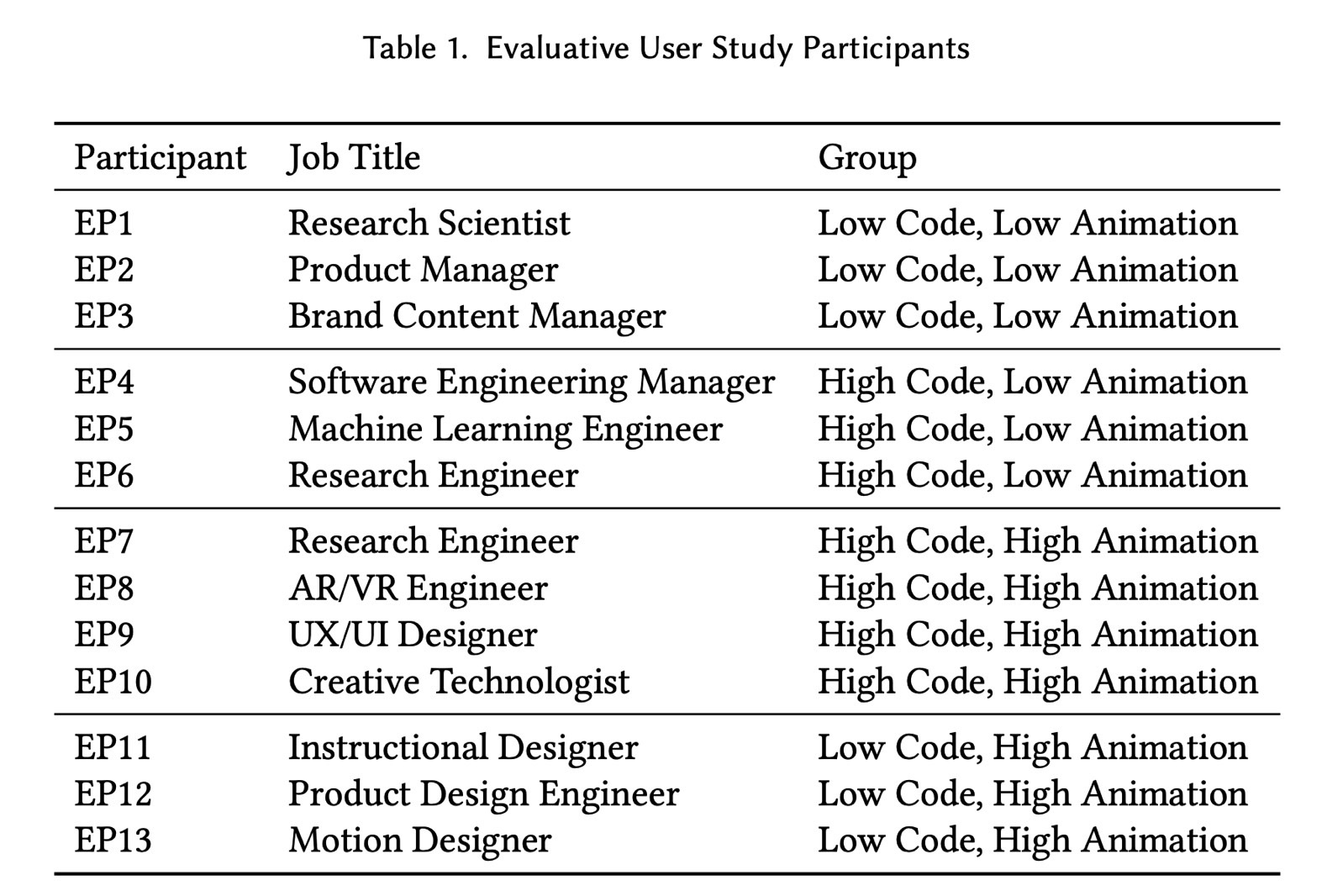

As for those EP4 and EP9 subjects Apple mentions, they’re two of the 13 testers Apple selected. As you’ll see later in the post, Apple looked at different types of testers with various backgrounds in code generation and animation. All of them had to edit the same two images.

Will we see it in iOS 18?

Regardless of their background, the participants appreciated the Keyframer experience. “I think this was much faster than a lot of things I’ve done… I think doing something like this before would have just taken hours to do,” said EP2, a participant from the first group. Even the most skilled coder and animator liked the experience.

“Part of me is kind of worried about these tools replacing jobs, because the potential is so high,” EP13 said. “But I think learning about them and using them as an animator, it’s just another tool in our toolbox. It’s only going to improve our skills. It’s really exciting stuff.”

The study details how many lines of code the AI produced to deliver the animation and how the testers worked: whether they refined the results, and whether they worked on the different animations at the same time or separately. It also looks at the actual prompts from the various individuals, and how specific or vague they are.

Apple concluded that LLM-powered tools like Keyframer can help creators prototype ideas at various stages of the design process and iterate on them with the help of natural language prompts, including vague commands like “make it look cool.”

The researchers also found that “Keyframer users found value in unexpected LLM output that helped spur their creativity. One way we observed this serendipity was with users testing out Keyframer’s feature to request design variants.”

“Through this work, we hope to inspire future animation design tools that combine the powerful generative capabilities of LLMs to expedite design prototyping with dynamic editors that enable creators to maintain creative control in refining and iterating on their designs,” Apple concludes.

Unlike MGIE, you can’t test Keyframer out in the wild yet. And it’s unclear whether Keyframer is advanced enough to make it into any Apple operating systems or software in the near future. Again, this research used GPT-4. Apple would need to replace it with its own language models.