Microsoft issues warning on China’s use of generative AI to disrupt US elections

A hot potato: For the second time in just over six months, Microsoft has issued a warning about China’s use of generative AI to sow disruption in the US during this election year. State-sponsored groups, working with the backing of North Korea, are also expected to target elections in South Korea and India, having launched a similar campaign during Taiwan’s presidential election in January.

Microsoft’s report, titled East Asia threat actors employ unique methods, notes that Chinese campaigns have continued to refine AI-generated or AI-enhanced content, creating videos, memes, and audio, among other formats. While its impact might not be significant right now, such content could prove increasingly effective down the line as it becomes more sophisticated, Microsoft said.

Back in September, analysts from the Microsoft Threat Analysis Center highlighted a campaign by Chinese operatives that used generative AI to create content (see example below) for social media posts focusing on politically divisive topics, including gun violence, and denigrating US political figures and symbols.

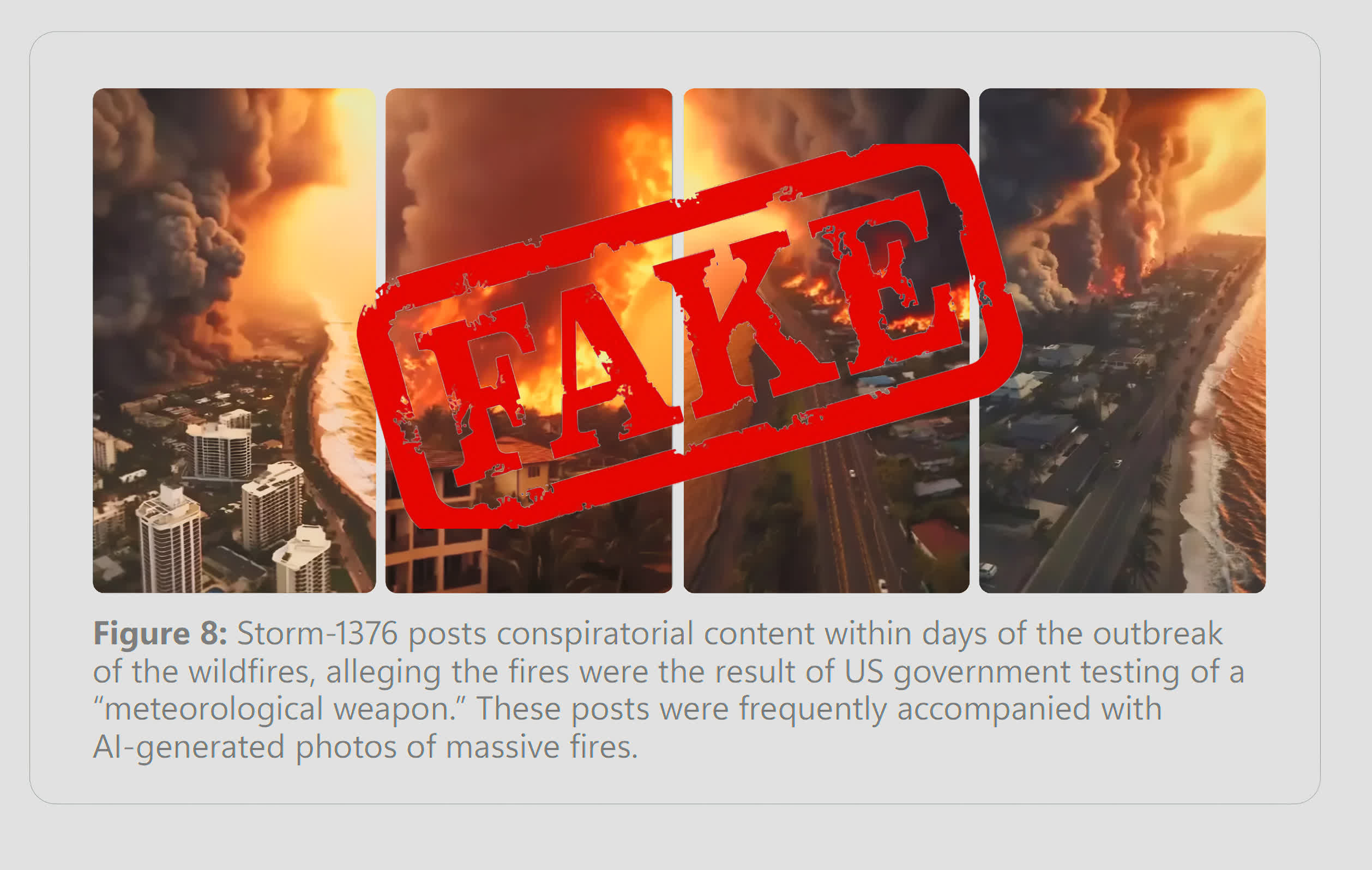

The latest report examines some of the conspiratorial content created using generative AI and pushed by Chinese social media accounts. This includes a claim from Beijing-backed group Storm 1376 in August 2023 that the US government had deliberately started the Hawaii wildfires using a military-grade weather weapon. The same group published conspiracy theories regarding the Kentucky train derailment in 2023 and tried to stoke discord in Japan and South Korea.

Storm 1376 was highly active during the Taiwanese elections in January, which Microsoft describes as a “dry run” for the upcoming elections in other countries. It posted fake audio of Foxconn founder Terry Gou, who had dropped out of the race in November, endorsing another candidate. The group also posted several AI-generated memes about eventual winner William Lai, who is pro-sovereignty. There was also an increased use of AI-generated news anchors, created with TikTok owner ByteDance’s CapCut tool, in which the fake presenters made unsubstantiated claims about Lai’s private life, including that he fathered illegitimate children.

One of the biggest problems with fighting realistic generative AI being used to spread misinformation is that many people refuse to believe it is fake, especially when the content conforms to their beliefs and values.
