Exponential growth brews 1 million AI models on Hugging Face

On Thursday, AI hosting platform Hugging Face surpassed 1 million AI model listings for the first time, marking a milestone in the rapidly expanding field of machine learning. An AI model is a computer program (often using a neural network) trained on data to perform specific tasks or make predictions. The platform, which started as a chatbot app in 2016 before pivoting to become an open source hub for AI models in 2020, now hosts a wide array of tools for developers and researchers.

The machine-learning field represents a far bigger world than just large language models (LLMs) like the kind that power ChatGPT. In a post on X, Hugging Face CEO Clément Delangue wrote about how his company hosts many high-profile AI models, like “Llama, Gemma, Phi, Flux, Mistral, Starcoder, Qwen, Stable diffusion, Grok, Whisper, Olmo, Command, Zephyr, OpenELM, Jamba, Yi,” but also “999,984 others.”

The reason why, Delangue says, stems from customization. “Contrary to the ‘1 model to rule them all’ fallacy,” he wrote, “smaller specialized customized optimized models for your use-case, your domain, your language, your hardware and generally your constraints are better. As a matter of fact, something that few people realize is that there are almost as many models on Hugging Face that are private only to one organization – for companies to build AI privately, specifically for their use-cases.”

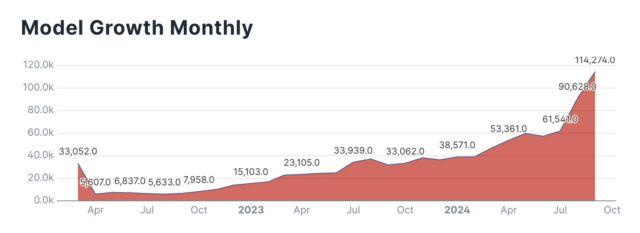

Hugging Face’s transformation into a major AI platform follows the accelerating pace of AI research and development across the tech industry. In just a few years, the number of models hosted on the site has grown dramatically along with interest in the field. On X, Hugging Face product engineer Caleb Fahlgren posted a chart of models created each month on the platform (and a link to other charts), saying, “Models are going exponential month over month and September isn’t even over yet.”

The power of fine-tuning

As hinted by Delangue above, the sheer number of models on the platform stems from the collaborative nature of the platform and the practice of fine-tuning existing models for specific tasks. Fine-tuning means taking an existing model and giving it additional training to add new concepts to its neural network and alter how it produces outputs. Developers and researchers from around the world contribute their results, leading to a large ecosystem.
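
To make the idea of "additional training" concrete, here is a minimal sketch in plain Python. It is a toy illustration only, not how any model on Hugging Face is actually fine-tuned: the "model" is a two-parameter line, the starting weights and the task data are invented for the example, and the function names `mse` and `fine_tune` are hypothetical.

```python
def mse(w, b, data):
    """Mean squared error of the line y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def fine_tune(w, b, data, lr=0.05, steps=200):
    """Continue training from existing weights with plain gradient descent."""
    n = len(data)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Assumed "pretrained" weights: a model that already learned y = 2x.
pretrained_w, pretrained_b = 2.0, 0.0

# Invented task-specific data that follows y = 2x + 1 instead.
task_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

loss_before = mse(pretrained_w, pretrained_b, task_data)
tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, task_data)
loss_after = mse(tuned_w, tuned_b, task_data)

print(f"loss before fine-tuning: {loss_before:.4f}")
print(f"loss after fine-tuning:  {loss_after:.4f}")
```

The point of the sketch is that training starts from weights that already exist rather than from scratch, so only a small adjustment is needed to fit the new task.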

For example, the platform hosts many variations of Meta’s open-weights Llama models that represent different fine-tuned versions of the original base models, each optimized for specific applications.

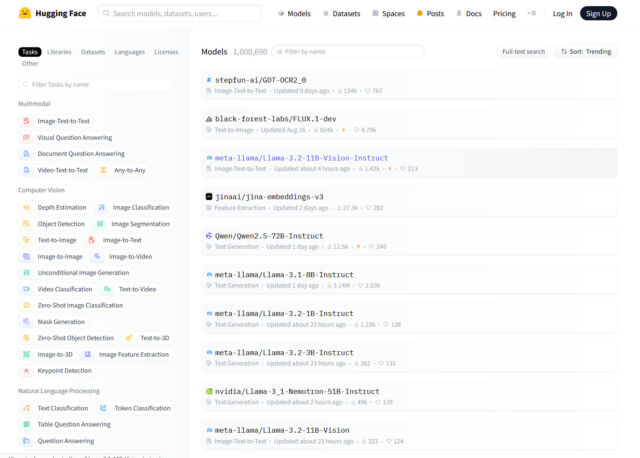

Hugging Face’s repository includes models for a wide range of tasks. Browsing its models page shows categories such as image-to-text, visual question answering, and document question answering under the “Multimodal” section. In the “Computer Vision” category, there are sub-categories for depth estimation, object detection, and image generation, among others. Natural language processing tasks like text classification and question answering are also represented, along with audio, tabular, and reinforcement learning (RL) models.

When sorted for “most downloads,” the Hugging Face models list reveals trends about which AI models people find most useful. At the top with a massive lead at 163 million downloads is Audio Spectrogram Transformer from MIT, which classifies audio content like speech, music, and environmental sounds. Following that with 54.2 million downloads is BERT from Google, an AI language model that learns to understand English by predicting masked words and sentence relationships, enabling it to assist with various language tasks.

Rounding out the top five AI models are all-MiniLM-L6-v2 (which maps sentences and paragraphs to 384-dimensional dense vector representations, useful for semantic search), Vision Transformer (which processes images as sequences of patches to perform image classification), and OpenAI’s CLIP (which connects images and text, allowing it to classify or describe visual content using natural language).
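
As a rough illustration of why dense vector representations are useful for semantic search, the sketch below ranks a few sentences by cosine similarity to a query vector. The vectors are hypothetical three-dimensional stand-ins written by hand, not real output from all-MiniLM-L6-v2 (which produces 384 dimensions), and the function names are invented for the example.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec, corpus):
    """Return (score, text) pairs, most similar to the query first."""
    scored = [(cosine_similarity(query_vec, vec), text)
              for text, vec in corpus.items()]
    return sorted(scored, reverse=True)


# Hypothetical embeddings: hand-written toy vectors, not model output.
corpus = {
    "How do I reset my password?": [0.9, 0.1, 0.0],
    "Best hiking trails nearby": [0.0, 0.2, 0.9],
    "Steps to recover a locked account": [0.8, 0.3, 0.1],
}

# Toy vector standing in for an embedded query about account access.
query_vec = [1.0, 0.2, 0.0]

ranking = rank_by_similarity(query_vec, corpus)
for score, text in ranking:
    print(f"{score:.3f}  {text}")
```

In a real system, an embedding model would produce the vectors, and sentences with related meanings would land close together even when they share no words, which is what the two account-related sentences are meant to suggest here.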

No matter what the model or the task, the platform just keeps growing. “Today a new repository (model, dataset or space) is created every 10 seconds on HF,” wrote Delangue. “Ultimately, there’s going to be as many models as code repositories and we’ll be here for it!”
