Wrestling with AI, and the AIpocalypse we should be worried about


Editor’s take: Like virtually everyone in tech today, we’ve spent the past year trying to wrap our heads around “AI”: what it is, how it works, and what it means for the industry. We aren’t sure we have any good answers, but a few things have become clear. Maybe AGI (artificial general intelligence) will emerge, or we’ll see some other major AI breakthrough, but focusing too much on those risks could mean overlooking the very real, yet also very mundane, improvements that transformer networks are already delivering.

Part of the problem in writing this piece is that we’re stuck in something of a dilemma. On the one hand, we don’t want to dismiss the advances of AI. These new systems are important technical achievements; they aren’t toys suited only to generating pictures of cute kittens dressed in the style of Dutch masters contemplating a plate of fruit, as in the picture shown below (generated by Microsoft Copilot). They shouldn’t be easily dismissed.

Editor’s Note:

Guest author Jonathan Goldberg is the founder of D2D Advisory, a multi-functional consulting firm. Jonathan has developed growth strategies and alliances for companies in the mobile, networking, gaming, and software industries.

On the other hand, the vast majority of the public commentary about AI is nonsense. No one we have spoken with who is actually working in the field today thinks we are on the cusp of Artificial General Intelligence (AGI). Maybe we are just one breakthrough away, but we cannot find anyone who really believes that is likely. Despite this, the general media is filled with all kinds of stories that conflate generative AI and AGI, along with every kind of wild, unfounded opinion on what this means.

Setting aside all the noise, and there is a lot of noise, what we have seen over the past year is the rise of Transformer-based neural networks. We have been using probabilistic systems in compute for years, and transformers are a better, or more economical, method for performing that compute.

This is important because it expands the problem space we can tackle with our computers. So far this has largely fallen in the realm of natural language processing and image manipulation. These are important, sometimes even useful, but they apply to what is still a fairly small slice of user experiences and applications. Computers that can efficiently process human language will be very useful, but that does not equate to some kind of universal compute breakthrough.

This doesn’t mean that “AI” provides only a small amount of value, but it does mean that much of that value will arrive in fairly mundane ways. We think this value should be split into two buckets: generative AI experiences and low-level improvements in software.

Take the latter, improvements in software. This sounds boring, and it is, but that doesn’t mean it’s unimportant. Every major software and Internet company today is bringing transformers into its stack. For the most part, this will go completely unnoticed by users.

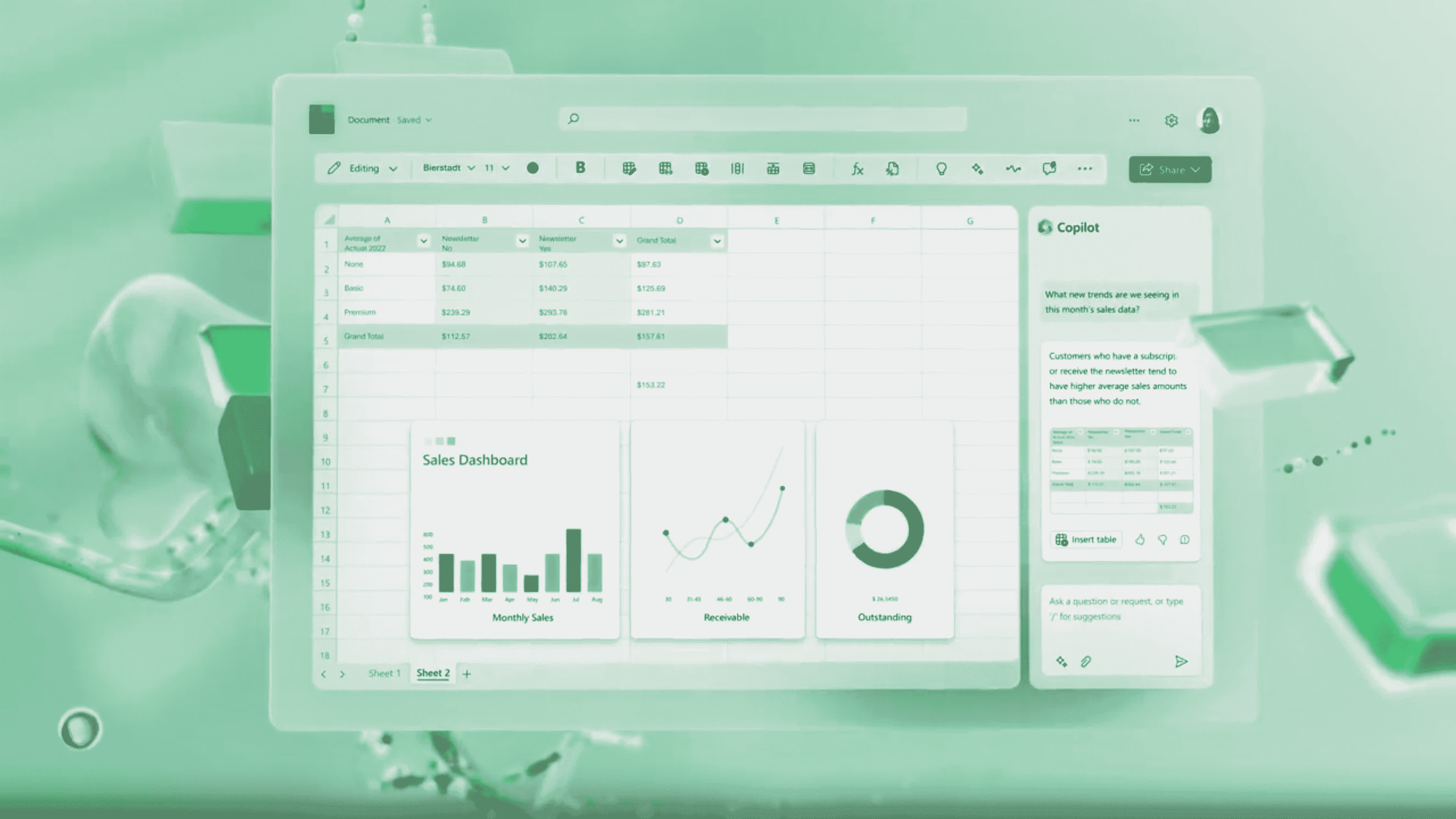


Security companies can make their products a little better at detecting threats. CRM systems may get a little better at matching user requests to useful results. Chip companies will improve processor branch prediction by some amount. All of these are small gains: 10% or 20% boosts in performance, or reductions in cost. And that’s okay; it’s still tremendous value when compounded across all the software out there. For the moment, we think the vast bulk of “AI” gains will come in these unremarkable but useful forms.

Generative AI may turn out to be more important. Maybe. Part of the problem we have with this field today is that much of the tech industry is waiting to see what everyone else will do on this front.

In their recent public commentary, every major processor company has pointed to Microsoft’s upcoming AI update as a major catalyst for adoption of AI semis. We imagine Microsoft may have some really cool features to add to MS Word, PowerPoint and Visual Basic. Sure, go ahead and impress us with AI Excel. But that’s a lot of hope to hang on a single company, especially a company like Microsoft that isn’t well known for delivering great user interfaces.

For its part, Google seems to be a deer in the headlights when it comes to transformers, which is ironic given that it invented them. When it comes down to it, everyone is really waiting for Apple to show us all how to do it right. So far, Apple has been noticeably quiet about generative AI. Maybe it is as confused as everyone else, or maybe it just doesn’t see the utility yet.

Apple has had neural processors in its phones for years, and it was very quick to add transformer support to its M Series CPUs. It doesn’t seem right to say Apple is falling behind in AI when maybe it is just lying in wait.

Bringing this back to semiconductors, it can be tempting to build big expectations and elaborate scenarios for all the ways in which AI will drive new business. Hence the growing volume of commentary about AI PCs and the market for inference semiconductors. We aren’t convinced; it isn’t clear that any of these companies will really be able to build big markets in these areas.

Instead, we tend to see the advent of transformer-based AI systems in much simpler terms. The rise of transformers largely seems to mean a transfer of influence and value capture to Nvidia, at the expense of Intel, in the data center. AMD can carve out its share of this transfer, and maybe Intel can stage the comeback of all comebacks, but for the foreseeable future there is no need to complicate things.

That said, maybe we’re getting this all wrong. Maybe there are huge gains just hovering out there, some major breakthrough from a research lab or a deca-unicorn pre-product startup. We won’t rule out that possibility. Our point here is simply that we are already seeing meaningful gains from transformers and other AI systems. All these “under the fold” improvements in software are already important, and we should not agonize while waiting for the emergence of something even bigger.

Some would argue that AI is a fad, the next bubble waiting to burst. We are more upbeat than that, but it’s worth thinking through what the downside case for AI semis might look like…

We are fairly optimistic about the prospects for AI, albeit in some decidedly mundane places. But we are still in the early days of this transition, with many unknowns. We are aware of a strain of thinking among some investors that we are in an “AI bubble”. The hard form of that thesis holds that AI is just a passing fad, and that once the bubble deflates the semis market will revert to the status quo of two years ago.

Somewhere between the extremes of “AI is so powerful it will end the human race” and “AI is a useless toy” sits a much milder downside case for semiconductors.

As far as we can gauge right now, the consensus seems to hold that the market for AI semis will be modestly additive to overall demand. Companies will still need to spend billions on CPUs and traditional compute, but they now also need to add AI capabilities, which requires buying GPUs and accelerators.

At the heart of this case is the market for inference semis. As AI models percolate into widespread use, the bulk of AI demand will fall in this area, since inference is what actually makes AI useful to users. There are a few variations within this case. Some CPU demand will disappear in the transition to AI, but not a large share. And investors can debate how much inference will run in the cloud versus at the edge, and who will pay for that capex. But this is essentially the base case: good for Nvidia, with plenty of the inference market left over for everyone else in a growing market.

The downside case really comes in two forms. The first centers on the size of that inference market. As we have mentioned a few times, it isn’t clear how much demand there will be for inference semis. The most glaring problem is at the edge. As interested as consumers seem in generative AI today, willing to pay $20+/month for access to OpenAI’s latest, the case for running that generative AI on device is not clear.

People will pay for OpenAI, but will they really pay an extra dollar to run it on their device rather than in the cloud? How would they even be able to tell the difference? Admittedly, there are legitimate reasons why enterprises would not want to share their data and models with third parties, which would require on-device inference. However, this seems like a problem that can be solved by a group of lawyers and a tightly worded License Agreement, which is certainly much more affordable than building out a bunch of GPU server racks (if you could even find any to buy).

All of which is to say that companies like AMD, Intel and Qualcomm, which are building big expectations for on-device AI, are going to struggle to charge a premium for their AI-ready processors. On its latest earnings call, Qualcomm’s CEO framed the case for AI-ready Snapdragon as providing a positive uplift for mix shift, which is a polite way of saying limited price increases for a small subset of products.

The market for cloud inference should be much better, but even here there are questions about its size. What if models shrink enough that they can run reasonably well on CPUs? This is technically possible. The preference for GPUs and accelerators is at heart an economic one, and if a few variables change, CPU inference may be good enough for many workloads. That would be catastrophic, or at least very bad, for the expectations of all the processor makers.

Probably the scariest scenario is one in which generative AI fades as a consumer product: useful for programming and writing catchy spam emails, but little else. That is the real bear case for Nvidia. Not some nominal share gains by AMD, but a lack of compelling use cases. This is why we get nervous about the extent to which all the processor makers seem to depend on Microsoft’s upcoming Windows refresh to spark consumer interest in the category.

Ultimately, we think the market for AI semis will continue to grow, driving healthy demand across the industry. Probably not as much as some hope, but far from the worst-case, “AI is a fad” camp.

It will take a few more cycles to find the interesting use cases for AI, and there is no reason to think Microsoft is the only company that can innovate here. All of which places us firmly in the middle of expectations: long-term structural demand will grow, but there will be ups and downs before we get there, and probably no post-apocalyptic zombies to worry about.
